TrainXGB - Train XGBoost in Browser

The simplest way to train an XGBoost model with a GUI, right in your browser

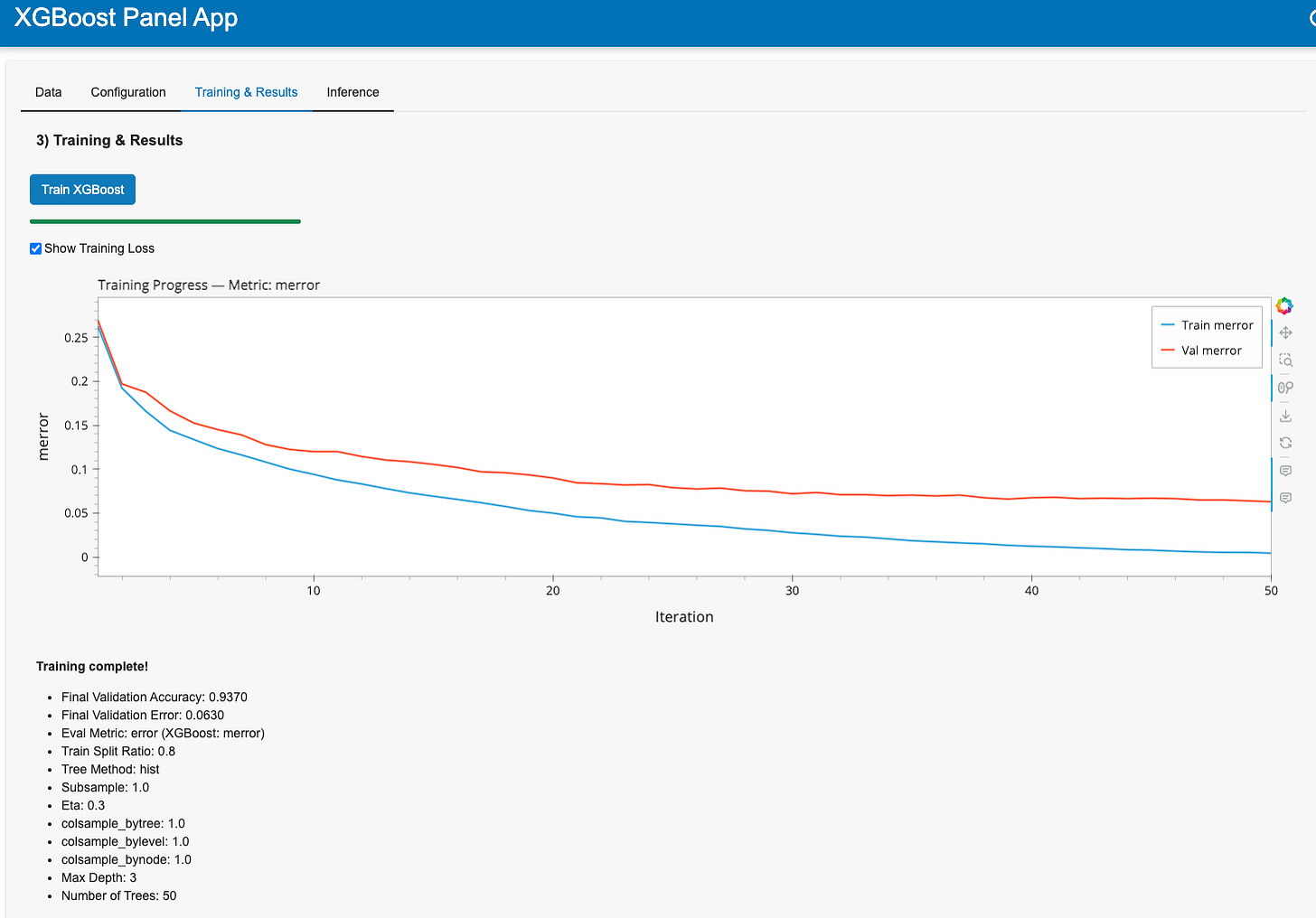

I can finally share a bit about one of the small projects that I’ve been working on recently - www.trainxgb.com. It’s an in-browser app built from scratch with Panel and PyScript. It allows you to train an XGBoost model using a simple GUI and leverages the powerful WebAssembly (WASM) in-browser compute environment. Just upload your CSV data file, choose the appropriate hyperparameters, and press the “Train XGBoost” button. No coding skills required, no account creation necessary.
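For readers curious what that workflow roughly corresponds to in code, here is a minimal Python sketch. It is not the app's actual source: the function names `load_csv`, `build_params`, and `train` are hypothetical, the CSV is assumed to hold only numeric columns plus one label column, and the hyperparameter defaults are simply XGBoost's own. `xgboost` and `numpy` are imported inside `train`, so the CSV and parameter helpers can be tried without them.

```python
import csv
import io


def load_csv(text, target):
    """Parse CSV text into feature rows X, labels y, and feature names.

    Assumes every column is numeric and `target` names the label column.
    """
    reader = csv.DictReader(io.StringIO(text))
    if target not in (reader.fieldnames or []):
        raise ValueError(f"target column {target!r} not found in CSV header")
    feature_names = [name for name in reader.fieldnames if name != target]
    X, y = [], []
    for row in reader:
        X.append([float(row[name]) for name in feature_names])
        y.append(float(row[target]))
    return X, y, feature_names


def build_params(max_depth=6, learning_rate=0.3, objective="binary:logistic"):
    """Collect the kind of hyperparameters a GUI form might expose."""
    return {"max_depth": max_depth, "eta": learning_rate, "objective": objective}


def train(X, y, feature_names, params, num_boost_round=100):
    """Train a booster on the parsed data (requires numpy and xgboost)."""
    import numpy as np
    import xgboost as xgb

    dtrain = xgb.DMatrix(
        np.asarray(X), label=np.asarray(y), feature_names=feature_names
    )
    return xgb.train(params, dtrain, num_boost_round=num_boost_round)
```

With a CSV string in hand, something like `train(*load_csv(text, "label"), build_params(max_depth=4))` would do in a script what the button does in the GUI, under the assumptions above.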

This is just the first iteration of the app, and it includes more or less all the features that I wanted it to have. It will likely be pretty buggy, and potentially unstable. I’ve tried to test it as much as possible, and for the most part it has worked the way I wanted it to. I’ve tested it on Safari and Chrome on Macs and an iPad, Firefox on Ubuntu, and Edge on Windows, and it worked on all of them. The app is a bit too big to run on your cell phone, but it might be possible to optimize it in the next few iterations. It should ideally be used with small-ish datasets, the kind that you usually see in most Data Science and tabular data use cases. I’ve tested it with datasets up to 100 MB, and it worked fine. I’ve also used it on MNIST: training finished in a minute, and I got 98% accuracy. Go ahead, just give it a try.

I first started fiddling with something like this app a few years ago when PyScript first came out. I was able to create a *very* rudimentary app that just trained on a single built-in dataset. Just the most basic proof of concept. At the time I wanted to build something more powerful, but I could not get any buy-in for it. Various other professional and personal circumstances prevented me from pursuing this project until now.

Another big obstacle was that all the technologies used for this project are relatively new and obscure, and I am not a developer. Even just a year ago it would have been a very steep development cycle to get a project like this one going. Fortunately, the AI coding assistants have grown tremendously in power over the last few months. For most of this project I used the help of OpenAI’s o1-pro model. As I mentioned, all the technologies in my stack are relatively nonstandard, and many AI assistants are not able to easily get a working version of the app on the first try. At one point I tried to marshal Claude to rewrite and streamline an early version of the app, but it decided that what I really needed was to rewrite the whole app in JavaScript and run the XGBoost training in the backend. (!!!) When Grok 3 came out it gave me a pretty decent output as well, but by then I was already committed to o1-pro, and just decided to stick with it.

In future posts I may write a bit more about this whole process and the tech stack I used, but for now I’d just like to have you play with the app and send me all the constructive feedback you have.