Part 1: Revolutionising the Onboarding Journey for Data Providers and Consumers

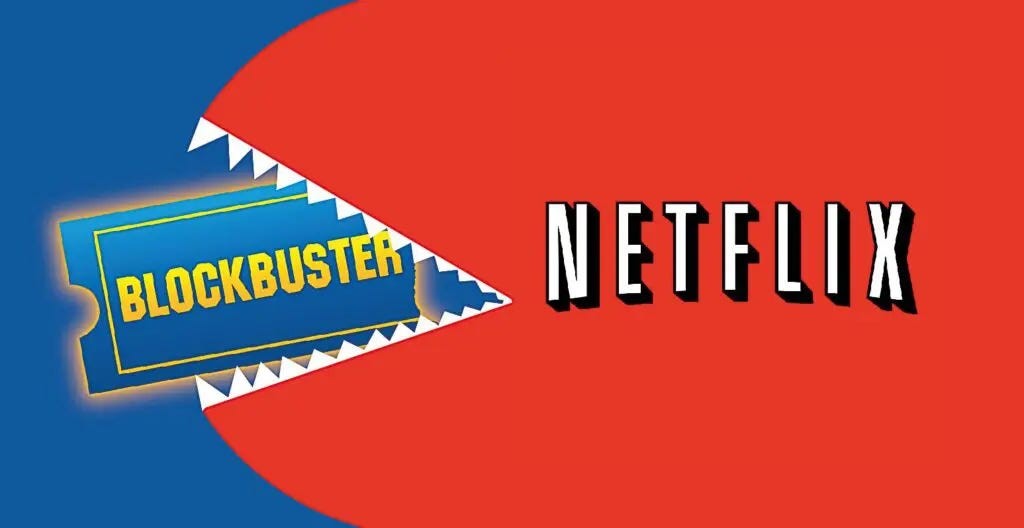

If you were a 90s kid like me, it’s likely your Saturday night looked a bit like this:

Ask your Mum/Dad to drive you to the local Blockbuster store.

Browse the shelves for your VHS of choice, hoping they still have a copy (American Pie, if memory serves me correctly).

Queue up with all the other folks to pay, and make sure you mark down the return date.

Three days later, drive back down and post it through the returns box to avoid any late fees.

You can assume this process repeated itself until around the mid-2000s, when Netflix released its streaming service. Suddenly, instead of a limited selection, an extended turnaround process and late fees, you as a consumer could log in to your Netflix account and browse thousands of films and TV shows, available on demand at the click of a button.

The Data Industry Status Quo

The data industry today is a fascinating dichotomy. On one hand, providers are churning out high-quality and, in many cases, AI/ML-powered solutions that deliver real-time insight. On the other, for many of them the primary mechanism for delivering those solutions to customers is still FTP - a technology approaching its 53rd birthday. Now whilst FTP has its merits and has stood the test of time, there are a number of factors that constrain its users:

The ETL (Extract, Transform, Load) process - It’s time consuming, cumbersome and requires multiple technical stakeholders in order to operate effectively - functions that not all organisations have the resources, budgets or knowledge to support internally. This is without considering that many consumers work with multiple sources, meaning these processes are repeated for every dataset an organisation uses. One customer noted that onboarding a new provider takes anywhere between 3 and 6 months, given the resources required to integrate the data before testing can even commence.

Scalability - We’ve found that many consumers are creating multiple data copies to support the needs of geographically dispersed teams and varying organisational functions. This of course creates governance challenges around data consistency, accuracy and lineage.

Data Volume - We’ve worked with providers over the past 12 months who deal in significant data volumes: for example, geospatial providers offering insight down to lat/long level, or financial services organisations delivering up to 800TB of equity trading data. With this data delivered in raw format, processing capacity and time to value limit a consumer’s ability to work with it effectively and in a timely manner.

Consumers are increasingly looking to work with external data as a means to deliver business insight, manage risk and support growth objectives. Yet as noted by Forrester, more than 70% of a firm’s time is spent sourcing, processing and integrating that data - functions that add nothing to the bottom line and are undertaken before any value can be determined. If providers are able to help lower the TCO (total cost of ownership) associated with the technical onboarding journey, it can support an accelerated sales cycle and, more importantly, deliver a higher ROI (return on investment) to customers.

The New Data Onboarding Journey

The concept of Data Sharing is relatively new, but in essence it is the foundational technology that supports the provider-consumer relationship without the need for an extensive technical onboarding process. Customers can be up and running in either a testing or production environment within minutes rather than months.

Data sharing requires no ETL - the data is made available to the consumer in relational database format, directly mounted within their account. The data is always live and ready to query - customers are not required to load data or manage any form of update schedule or maintenance on the data files. This frees up technical resources for higher-value functions.

The data is a virtual instance of what the provider is making available, meaning that there is no need to duplicate it across teams or geographies and it is never stale. All users view the same data instance in accordance with the governance controls imposed. This ensures firms are working with a single source of truth throughout the enterprise.

No physical movement of the data takes place, meaning the customer has no need to store it. This makes large datasets and multiple sources more readily accessible, at much lower operational cost.
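To make the contrast between a delivered copy and a shared, always-live view concrete, here is a toy Python sketch. It is purely illustrative and is not how any data sharing platform is implemented or called: the `provider_table` dataset, the ticker names and the prices are all made-up assumptions, and a read-only `MappingProxyType` simply stands in for the idea of a virtual instance of the provider's data.

```python
import copy
from types import MappingProxyType

# Hypothetical provider dataset (illustrative values): ticker -> latest price.
provider_table = {"ACME": 101.5, "GLOBEX": 47.2}

# File-drop style delivery: the consumer receives a physical copy
# that they must store and refresh themselves.
delivered_copy = copy.deepcopy(provider_table)

# Sharing style delivery: the consumer receives a read-only view over
# the provider's data; nothing is moved or duplicated.
shared_view = MappingProxyType(provider_table)

# The provider publishes an update.
provider_table["ACME"] = 103.0

# The copy is now stale; the shared view reflects the update immediately.
print("delivered copy:", delivered_copy["ACME"])
print("shared view:   ", shared_view["ACME"])

# The consumer cannot modify the provider's data through the view.
try:
    shared_view["ACME"] = 0.0
    view_is_read_only = False
except TypeError:
    view_is_read_only = True
print("view is read-only:", view_is_read_only)
```

Every additional team that needs the data would get another view over the same underlying table rather than another copy, which is the single-source-of-truth property described above.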

Returning to the Netflix parallel, it was 2007 when the company launched its streaming service to the world. This revolutionised a user’s ability to source, test and consume content like never before. Looking at my experience over the past 12 years in the data industry and, more importantly, the last 12 months at Snowflake, providers and consumers have wised up to the costs and time associated with traditional data integration and are now looking to take advantage of that same ‘click-and-go’ functionality Netflix pioneered back in 2007.

Watch out for Part 2 next week, when I’ll be discussing the ways in which we’ve seen alternative data providers build market share across key industries and deliver improved time to value for customers.