“There’s a very high likelihood that somehow, someway, at some point in the 2020 election, deepfakes will play some sort of role,” said Josh Ginsberg, the CEO and founder of Zignal Labs, a San Francisco-based media analytics platform that works with presidential campaigns. “If we don’t know where it is going to play a role, the question is how do we collectively respond to that?”

Ginsberg knows; he’s served on three presidential campaigns, specializing in crisis management—rapidly identifying and mitigating reputational risks and informing strategic decisions.


Needless to say, deepfakes have now entered the reputational-risk category.

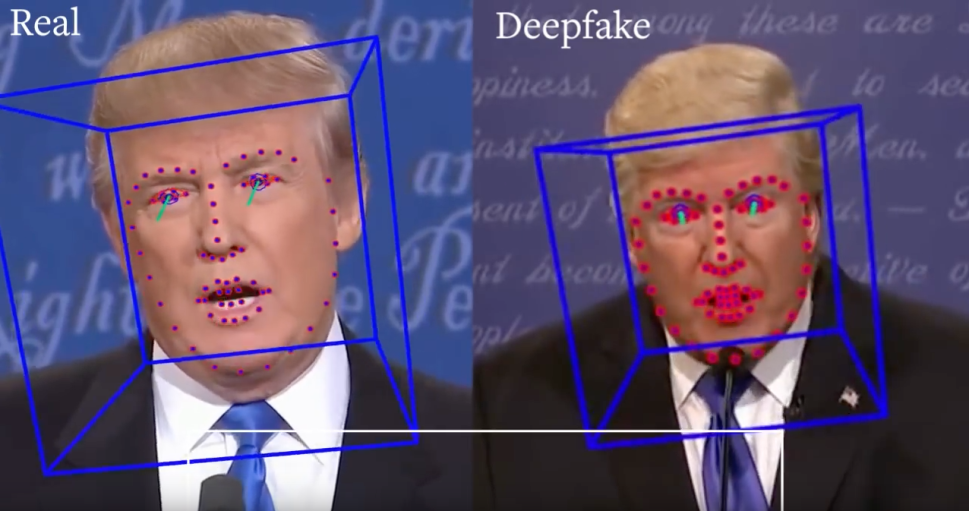

In simple terms, a deepfake is like a digital puppet: it uses machine learning and 3D models of a target’s face to let you manipulate a person in a video, and to edit and change what they say. It gets better: These changes appear to have a seamless audio-visual flow without jump cuts.

Why would anyone possibly want to use this for the benefit of evil?

When deepfakes first came onto Ginsberg’s radar, in 2017, he didn’t think of them as something that could possibly impact a presidential election. After all, back in those archaic days, deepfakes were largely used to swap Nicolas Cage’s face into random movie scenes.

But this is the world of tech.

“I did not necessarily think it would get to the point of technology that it’s at this quickly,” Ginsberg told Observer. “By that, I mean the ability for really anyone to be able to create a deepfake for $20 on their home computer that could literally shift the narrative in a presidential election is really quite scary.”

“And that is the reality that we’re looking at right now,” he added.

This could make the 2020 presidential election, which is shaping up to be a very dirty election, even dirtier.

In pre-deepfake days, when Ginsberg took on a presidential campaign, he had to deal with defusing opposition research contained, say, in a video that was pushed out on YouTube, went crazy viral and was shared on social media. Ah, yes, the innocent days of 2016.

“What is unique and different about deepfakes is the really good ones are really difficult to verify,” Ginsberg explained. “That’s where you’re in some uncharted territory.”

Russian bots are now old school in the world of misinformation.

Imagine a manufactured October surprise that goes far beyond the shock of Trump’s Access Hollywood video. (Though that real, horrendous video had zero impact when it came to keeping Trump out of the White House.) It’s frightening to think of manipulated words put into a politician’s mouth, in a video that could go viral in a matter of minutes.

And Congress is freaking out.

Earlier this month, the House Intelligence Committee held its first hearing on the matter. Intelligence Committee Chairman Adam Schiff (D-Calif.) called deepfakes a nightmarish threat to the 2020 presidential election that could leave voters “struggling to discern what is real and what is fake.”

At the hearing, Schiff said: “Now is the time for social media companies to put in place policies to protect users from misinformation, not in 2021 after viral deepfakes have polluted the 2020 elections.”

Ginsberg pointed out that candidates are already on guard.

“The more sophisticated campaigns. Absolutely,” he stated. “We’re working with a number of presidential campaigns right now, and we bring in every single mention of a candidate about competitors, about issues, the split-second it happens.”

Zignal Labs is also coordinating with a number of academic institutions and researchers to try to find the best technological advances to detect deepfakes.

At UC Berkeley, computer-science professor Hany Farid is developing a deepfake detection system that analyzes videos and tracks the subtle facial expressions, gestures and head movements that are distinctive to an individual.

Once the system is in place, media outlets could upload videos, run an analysis and determine whether a video is a deepfake.

Happy ending to the deepfake story, right?

Except… “We are outgunned,” Farid told The Washington Post. “The number of people working on the video-synthesis side, as opposed to the detector side, is 100 to 1…”

Plus, there’s another drawback. “That’s step one—to be able to do it in a research environment,” explained Ginsberg. “Being able to do it at scale as millions or billions of mentions are happening within split-seconds are two different things.”

True. Mix that with how rapidly a video can go viral these days.

Look at the outrage that was sparked when the fake (not deep) Nancy Pelosi drunk video dropped. That video got roughly three million views after being shared across social media. And once it’s out there, the damage could already be done.

“That’s what makes the deepfake problem so scary,” Ginsberg said. “It does put these campaigns in a situation where some folks saw it before they knew anything… and gives them a certain perception.”

Another troubleshooting method for Zignal Labs is monitoring how news or information spreads about their candidates—and calling out deepfake videos before they can go viral.

“You’re able to start doing things like thresholds of alerts as stories, as messages are beginning to accelerate; campaigns can get alerted to those things,” said Ginsberg.
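The kind of threshold alert Ginsberg describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Zignal Labs' actual system: the function name `acceleration_alerts`, the two thresholds and the sample counts are all hypothetical. It takes the number of mentions of a story in consecutive time windows and flags any window where volume is both high and growing fast.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Alert:
    window_index: int  # index of the time window that triggered the alert
    mentions: int      # mention count in that window
    growth: float      # this window's count divided by the previous window's


def acceleration_alerts(
    counts: List[int],
    min_mentions: int = 100,  # hypothetical floor: ignore low-volume noise
    min_growth: float = 2.0,  # hypothetical threshold: count at least doubled
) -> List[Alert]:
    """Flag windows where mentions are both high and growing quickly."""
    alerts = []
    for i in range(1, len(counts)):
        prev, curr = counts[i - 1], counts[i]
        if curr < min_mentions:
            continue
        # Treat growth from zero as infinite so a sudden burst still alerts.
        growth = curr / prev if prev > 0 else float("inf")
        if growth >= min_growth:
            alerts.append(Alert(i, curr, growth))
    return alerts


# Mentions of one story per five-minute window (made-up numbers).
counts = [12, 15, 40, 130, 420, 500]
for alert in acceleration_alerts(counts):
    print(alert)
```

With these made-up numbers, the fourth and fifth windows trigger alerts, while the last does not: volume is still high, but it is no longer accelerating. A real monitoring system would need to tune both thresholds per candidate and per platform.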

Previously, the old way to monitor a campaign involved staff members in a backroom with a wall of televisions, constantly doing Google News searches to see what new stories were popping up about a candidate, and then tracking whether the stories were moving, trending, and either positive or negative.

“Zignal Labs basically is designed to do that with technology in a much, much more efficient manner,” said Ginsberg about the new advancements. “We’re bringing in every single publicly available media data point from social media, traditional media and television, showing it in a very easy to use dashboard in real time. So you can see every single mention as it’s coming in and how it’s spreading throughout the media spectrum.”

The crisis management mode for deepfakes is to then respond and correct the record as quickly as possible.

“Step number one, situational awareness,” explained Ginsberg. “You need to get an alert the split-second that a deepfake video drops. Step two for a campaign is to verify if it’s true or if it’s not.”

For more obvious deepfake videos, say a candidate doing something absurd, that’s easy. But there could also be a very subtle usage of deepfake videos, such as manipulating a policy statement from 20 years ago, which could take a campaign a bit of time to verify. Thus, an internal apparatus and mechanisms must already be in place to quickly bury the doctored video and correct the record by showing the original version to prove the other is a fake.

“So that’s when you move more into crisis communication, you move into a place of being proactive and getting the news and information, that, hey, this is the fake, this is not real,” said Ginsberg. “Look at what the actual video shows—that’s important. Video, images and audio will diffuse it faster than anything.”

Since we’re in the early Wild West era of deepfakes, it’s unclear what type of legal action could be taken on a deepfake video. For example, what if The Daily Show uses a deepfake as a joke?

“I’m not sure we know yet how some of that stuff is going to play out,” said Ginsberg. “Where’s the line between that and you know, other ways deepfakes can be used and how that’s pushed around online?”

Although we’re already seeing hearings over the matter, when Zuckerberg testified in front of Congress last year, he had to spend a lot of time explaining the concept of the internet to a bunch of crusty old white guys.

Is that who we’re expecting to create this new and critical legal framework?

Also, there’s the matter of censorship. Will a person be able to create a deepfake video of a politician for the sole intent of being funny and sharing it with friends, or will all deepfakes of politicians be considered off-limits and banned from Facebook?

“There’s an education process for everybody on these things,” said Ginsberg. “I think you see the platform starting to step up in ways and defining the rules for how this works. It’s challenging because where does free speech stop? And then, they have to make these sorts of decisions.”

In this fork-in-the-road moment, Ginsberg feels that a key component is for the public to educate itself as a society on detecting deepfakes.

“There does have to be a certain level of vigilance amongst voters to see if it’s credible or not,” he said. “This is a new world and campaigns are going to need to adapt. The media is going to need to adapt, voters are going to need to adapt… a lot has happened in a relatively short period of time.”

Ginsberg’s hope is that we’re going to figure out how to handle deepfakes during this election cycle.

“But look, it’s going to play a role,” he concluded.