Understanding Spark Connect (Reynold Xin’s keynote at Data+AI Summit 2022)

Origin: https://coderstan.com/2022/07/02/spark-connect-reynold-xins-keynote-dataai-summit-2022/

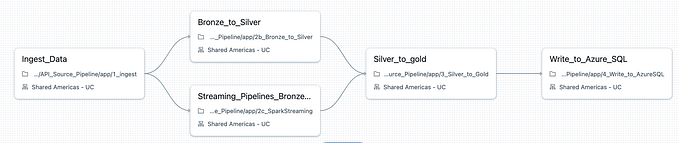

Reynold gave a great intro to some recent updates in the Spark community, along with some exciting new features. Here I am going to take a stab at explaining a new feature called “Spark Connect”.

What is “Spark Connect” in short?

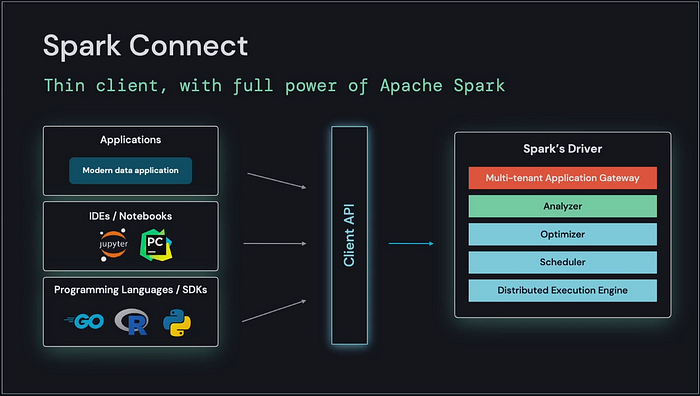

“Spark Connect” introduces a “thin client” that brings Spark query capability to low-compute devices. It pairs this with a re-architected Spark driver that works around some shortcomings of the monolithic driver and better supports multi-tenancy.
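To make the thin-client idea concrete, here is a minimal sketch of what connecting looks like from Python. It assumes PySpark 3.4 or later (where Spark Connect shipped, after this keynote) and a Spark Connect server you can reach; the host names and the `connect_url` and `run_remote_query` helpers are my own illustrations, not part of Spark.

```python
def connect_url(host: str, port: int = 15002, **params: str) -> str:
    """Build a Spark Connect connection string: sc://host:port/;key=value;..."""
    url = f"sc://{host}:{port}"
    if params:
        # Parameters are sorted only to keep the output deterministic.
        url += "/;" + ";".join(f"{k}={v}" for k, v in sorted(params.items()))
    return url


def run_remote_query(url: str):
    """Run a trivial query through a remote Spark Connect server.

    PySpark is imported lazily so connect_url() works without it installed.
    Assumes a Spark Connect server is listening at `url`.
    """
    from pyspark.sql import SparkSession

    # The client only builds the query plan; execution happens on the server.
    spark = SparkSession.builder.remote(url).getOrCreate()
    return spark.range(5).collect()


# Hypothetical endpoints, for illustration only.
local_url = connect_url("localhost")
secure_url = connect_url("spark.example.com", 443, use_ssl="true", token="abc")
```

Calling `run_remote_query(local_url)` needs nothing on the client beyond the PySpark package: no local JVM and no cluster configuration, which is what makes the client "thin".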

What should you use Spark Connect for?

- Run Spark jobs from your app or low-compute devices.

- Better experience on your multi-tenant clusters.

- Fewer OOMs: Manage your Spark job memory on the client, with explicit resource allocation.

- Avoid dependency conflicts: Dependencies are defined in the client for each application.

- Decoupled upgradability: Decouple Spark server and client version updates.

- Powerful debuggability: Step-through debugging from the client.

- Better observability: Access logs and metrics in the client (instead of viewing them in centralized log storage).

My take on Spark Connect

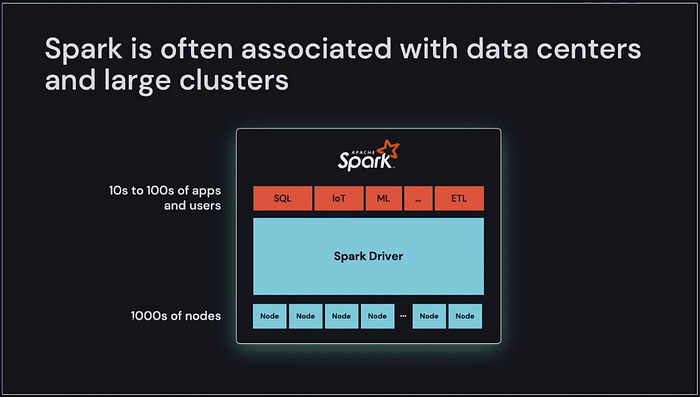

Overall, I think Spark Connect addresses the isolation and asynchrony parts of the problem. It is a very good feature to have, since it enables more real-world use cases. However, I would be cautious about getting into the business of multi-tenancy before addressing two fundamental requirements: queuing and scaling. Here are a couple of problems I faced in the first half of 2022 (H1 CY22) on a multi-tenant Spark cluster:

Missing queuing mechanism to handle spiky job traffic

Spark is not very good at handling “spiky traffic”. This is a big problem in my daily work, which requires processing thousands of Spark jobs at M365 scale. As far as I can tell, there is no good built-in mechanism to smooth out a burst of 10k parallel jobs and have them line up on the cluster. This has forced us to build a custom job rate control solution on top of our Spark cluster (HDInsight).
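For a sense of what "job rate control" means here, below is a minimal sketch, not our production solution. It caps the number of in-flight submissions with a bounded worker pool, so a burst of jobs waits in the pool's queue instead of hitting the cluster all at once. `FakeCluster` and `run_with_limit` are hypothetical names, and the sleep stands in for a real submission.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, TypeVar

J = TypeVar("J")
R = TypeVar("R")


def run_with_limit(jobs: Iterable[J], submit: Callable[[J], R],
                   max_in_flight: int) -> List[R]:
    """Call submit(job) for every job, with at most max_in_flight at a time.

    Extra jobs wait in the executor's internal queue until a slot frees up.
    Results come back in the same order as the input jobs.
    """
    with ThreadPoolExecutor(max_workers=max_in_flight) as pool:
        return list(pool.map(submit, jobs))


class FakeCluster:
    """Stand-in for a real cluster; records the peak number of concurrent jobs."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.running = 0
        self.peak = 0

    def submit(self, job_id: int) -> int:
        with self._lock:
            self.running += 1
            self.peak = max(self.peak, self.running)
        time.sleep(0.01)  # pretend the job takes a little while
        with self._lock:
            self.running -= 1
        return job_id


cluster = FakeCluster()
results = run_with_limit(range(50), cluster.submit, max_in_flight=8)
print(f"completed={len(results)} peak_concurrency={cluster.peak}")
```

A real version would also need retries, priorities, and persistence across restarts, which is exactly the kind of machinery I would rather get from the platform than build myself.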

Apache Livy job submission throttling

This is yet another problem that gave me a headache in H1 CY22, while scaling out to process graph embedding training for 50K tenants. Our compliant clusters use Apache Livy as a proxy service to deliver job payloads to the Spark cluster. I was able to get about 200 parallel jobs submitted in one go without taking down the cluster. However, I look forward to raising this number by 100x, since I don’t see the point of capping the job submission rate while there are plenty of resources to host more jobs.
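For readers who have not used Livy, a "job payload" is a JSON document posted to Livy's `/batches` REST endpoint. The sketch below only builds such a request with the standard library and does not send it. The Livy URL, file path, and arguments are made-up placeholders, and `build_batch_request` is a hypothetical helper of mine.

```python
import json
from typing import Dict, List, Optional
from urllib.request import Request


def build_batch_request(livy_url: str, file: str, args: List[str],
                        conf: Optional[Dict[str, str]] = None) -> Request:
    """Build (but do not send) a POST /batches request for Apache Livy."""
    payload: Dict[str, object] = {"file": file, "args": args}
    if conf:
        payload["conf"] = conf  # Spark configuration properties for this job
    return Request(
        url=livy_url.rstrip("/") + "/batches",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder endpoint and job, for illustration only.
req = build_batch_request(
    "http://livy.example:8998/",
    file="local:///jobs/train_embeddings.py",
    args=["--tenant", "tenant-001"],
    conf={"spark.executor.memory": "4g"},
)
print(req.get_method(), req.full_url)
```

Sending it is one call to `urllib.request.urlopen(req)`. Every job goes through this single HTTP front door, so the proxy's capacity, rather than the cluster's, ends up setting the ceiling on how many jobs can be submitted at once.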

Originally published at http://coderstan.com on July 3, 2022.