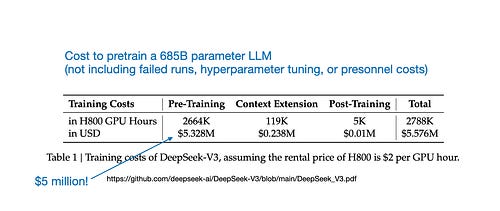

Sure, it's widely known that pretraining large language models (LLMs) is incredibly expensive, but how expensive, exactly? Back in January, I did a back-of-the-envelope calculation:

"""

A 7B Llama 2 model costs about $760,000 to pretrain!

And this assumes you get everything right from the get-go: no hyperparameter tuning, no debugging, no …
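A figure in that range can be reproduced with a few lines of arithmetic. The sketch below is one way to get there, not necessarily the exact calculation behind the quote: it assumes the 184,320 A100 GPU hours reported for the 7B model in the Llama 2 paper, and an assumed cloud price of about $33 per hour for an 8-GPU A100 instance. Both the price and the helper name `pretraining_cost` are illustrative assumptions.

```python
# Back-of-the-envelope pretraining cost estimate (a sketch under stated assumptions).

GPU_HOURS = 184_320             # A100 GPU hours reported for Llama 2 7B in the Llama 2 paper
GPUS_PER_INSTANCE = 8           # assumption: GPUs are rented as 8-GPU cloud instances
PRICE_PER_INSTANCE_HOUR = 33.0  # assumption: approximate on-demand price in USD per instance-hour


def pretraining_cost(gpu_hours, gpus_per_instance, price_per_instance_hour):
    """Return the estimated cost in USD of a single, error-free training run."""
    instance_hours = gpu_hours / gpus_per_instance
    return instance_hours * price_per_instance_hour


cost = pretraining_cost(GPU_HOURS, GPUS_PER_INSTANCE, PRICE_PER_INSTANCE_HOUR)
print(f"Estimated cost: ${cost:,.0f}")  # prints "Estimated cost: $760,320"
```

Like the quoted estimate, this covers exactly one successful run; any restarts, tuning, or debugging would add to the total.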

My newsletter disappeared from Google, again!

Six weeks after changing my newsletter’s domain name, The Kaitchup finally regained its spot in Google Search, ranking just as well as before.

I thought the domain transition was finally accepted by Google, and everything seemed stable. But to my surprise, it only lasted ten days!

Now, The Kai…

I’ve written 100+ notebooks for fine-tuning/running LLMs on consumer hardware. They’re a nightmare to maintain, but totally worth it—they bring many new subscribers to The Kaitchup!

Next week, I'll publish two new notebooks: one for running accurate GGUF quantization and another explaining GuideLLM.

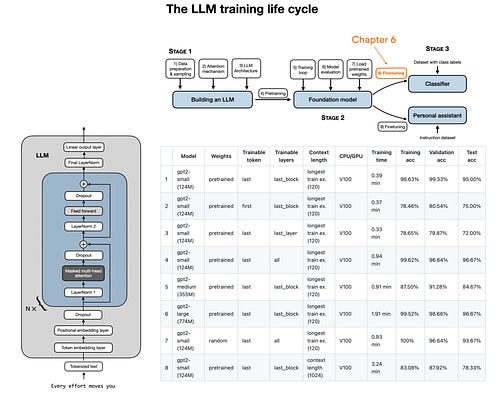

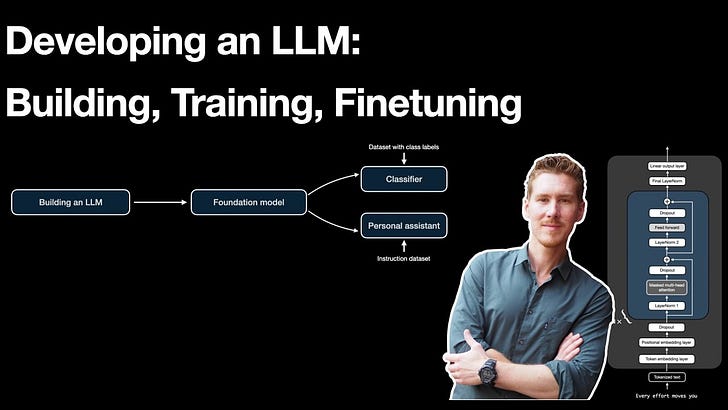

In case you are looking for something to watch this weekend, I’ve put together a 1-hour presentation that walks you through the entire development cycle of LLMs, from architecture implementation to the finetuning stages:

"Building a Large Language Model from Scratch" is in the final stages. I'm currently working on Chapter 6, finetuning a GPT-like LLM to classify spam messages. And while I'm polishing up the main content, I wanted to share some bonus experiments along with some interesting takeaways:

How to interpret this?

1. Traini…