Local Politics Was Already Messy. Then Came Nextdoor.

Community moderators say the social network is being exploited for political gain.

Updated at 1:15 p.m. ET on May 24, 2023

This article was featured in One Story to Read Today, a newsletter in which our editors recommend a single must-read from The Atlantic, Monday through Friday.

Kate Akyuz is a Girl Scout troop leader who drives a pale-blue Toyota Sienna minivan around her island community—a place full of Teslas and BMWs, surrounded by a large freshwater lake that marks Seattle’s eastern edge. She works for the county government on flood safety and salmon-habitat restoration. But two years ago, she made her first foray into local politics, declaring her candidacy for Mercer Island City Council Position No. 6. Soon after, Akyuz became the unlikely target of what appears to have been a misinformation campaign meant to influence the election.

At the time, residents of major cities all along the West Coast, including Seattle, were expressing concern and anger over an ongoing homelessness crisis that local leaders are still struggling to address. Mercer Island is one of the most expensive places to live in America—the estate of Paul Allen, a Microsoft co-founder, sold a waterfront mansion and other properties for $67 million last year—and its public spaces are generally pristine. The population is nearly 70 percent white, the median household income is $170,000, and fears of Seattle-style problems run deep. In February 2021, the island’s city council voted to ban camping on sidewalks and prohibit sleeping overnight in vehicles.

Akyuz, a Democrat, had opposed this vote; she wanted any action against camping to be coupled with better addiction treatment and mental-health services on Mercer Island. After she launched her novice candidacy, a well-known council incumbent, Lisa Anderl, decided to switch seats to run against her, presenting the island with a sharp contrast on the fall ballot. Anderl was pro–camping ban. In a three-way primary-election contest meant to winnow the field down to two general-election candidates, Akyuz ended up ahead of Anderl by 471 votes, with the third candidate trailing far behind both of them.

“That’s when the misinformation exploded,” Akyuz told me.

There is no television station devoted to Mercer Island issues, and the shrunken Mercer Island Reporter, the longtime local newspaper, is down to 1,600 paying subscribers for its print edition. Even so, the 25,000 people on this six-square-mile crescent of land remain hungry for information about their community. As elsewhere, the local media void is being filled by residents sharing information online, particularly over the platform Nextdoor, which aims to be at the center of all things hyperlocal.

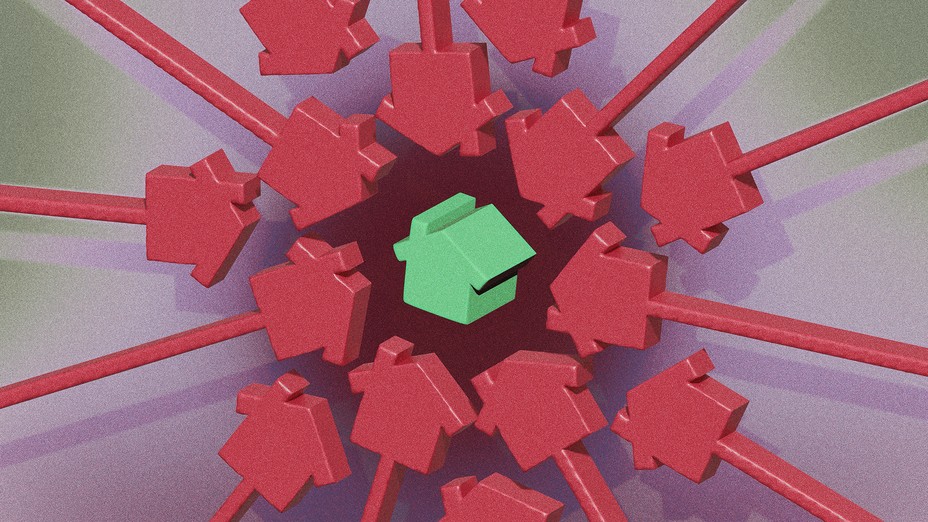

Launched in 2011, Nextdoor says it has a unique value proposition: delivering “trusted information” with a “local perspective.” It promises conversations among “real neighbors,” a very different service than that offered by platforms such as Twitter, TikTok, and Facebook. Nextdoor says it’s now used by one in three U.S. households. More than half of Mercer Island’s residents—about 15,000—use the platform. It’s where many of the island’s civic debates unfurl. During the heated 2021 city-council race between Anderl and Akyuz, residents saw Nextdoor playing an additional role: as a font of misinformation.

Anderl was accused of wanting to defund the fire department. (She had voted to study outsourcing some functions.) But Akyuz felt that she herself received far worse treatment. She was cast on Nextdoor as a troubadour for Seattle-style homeless encampments, with one Anderl donor posting that Akyuz wanted to allow encampments on school grounds. During the campaign’s final stretch, a Nextdoor post falsely stated that Akyuz had been endorsed by Seattle’s Socialist city-council member, Kshama Sawant. “Don’t let this happen on MI,” the post said. “Avoid a candidate endorsed by Sawant. Don’t vote Akyuz.”

Akyuz tried to defend herself and correct misinformation through her own Nextdoor posts and comments, only to be suspended from the platform days before the general election. (After the election, a Nextdoor representative told her the suspension had been “excessive” and rescinded it.) Akyuz believed there was a pattern: Nextdoor posts that could damage her campaign seemed to be tolerated, whereas posts that could hurt Anderl’s seemed to be quickly removed, even when they didn’t appear to violate the platform’s rules.

It was weird, and she didn’t know what to make of it. “You’re like, ‘Am I being paranoid, or is this coordinated?’” Akyuz said. “And you don’t know; you don’t know.”

Something else Akyuz didn’t know: In small communities all over the country, concerns about politically biased moderation on Nextdoor have been raised repeatedly, along with concerns about people using fake accounts on the platform.

These concerns have been posted on an internal Nextdoor forum for volunteer moderators. They were expressed in a 2021 column in Petaluma, California’s, local newspaper, the Argus-Courier, under the headline “Nextdoor Harms Local Democracy.” The company has also been accused of delivering election-related misinformation to its users. In 2020, for example, Michigan officials filed a lawsuit based on their belief that misinformation on Nextdoor sank a local ballot measure proposing a tax hike to fund police and fire services. (In that lawsuit, Nextdoor invoked its protections under Section 230, a controversial liability shield that Congress gave digital platforms 27 years ago. The case was ultimately dismissed.)

Taken together, these complaints show frustrated moderators, platform users, and local officials all struggling to find an effective venue for airing their worry that Nextdoor isn’t doing enough to stop the spread of misinformation on its platform.

One more thing Akyuz didn’t know: Two of the roughly 60 Nextdoor moderators on Mercer Island were quietly gathering evidence that an influence operation was indeed under way in the race for Mercer Island City Council Position No. 6.

“At this point, Nextdoor is actively tampering in local elections,” one of the moderators wrote in an email to Nextdoor just over a week before Election Day. “It’s awful and extraordinarily undemocratic.”

To this day, what really happened on Nextdoor during the Akyuz-Anderl race is something of a mystery, although emails from Nextdoor, along with other evidence, point toward a kind of digital astroturfing. Akyuz, who lost by a little over 1,000 votes, believes that Nextdoor’s volunteer moderators “interfered” with the election. Three local moderators who spoke with me also suspect this. Misinformation and biased moderation on Nextdoor “without a doubt” affected the outcome of the city-council election, says Washington State Representative Tana Senn, a Democrat who supported Akyuz.

Anderl, for her part, said she has no way of knowing whether there was biased moderation on Nextdoor aimed at helping her campaign, but she rejects the idea that it could have altered the outcome of the election. “Nextdoor does not move the needle on a thousand people,” she said.

Of course, the entity with the greatest insight into what truly occurred is Nextdoor. In response to a list of questions, Nextdoor said that it is “aware of the case mentioned” but that it does not comment on individual cases as a matter of policy.

None of this sat right with me. No, it wasn’t a presidential election—okay, it wasn’t even a mayoral election. But if Nextdoor communities across the country really are being taken over by bad actors, potentially with the power to swing elections without consequence, I wanted to know: How is it happening? One day last summer, seeking to learn more about how the interference in the Akyuz-Anderl race supposedly went down, I got in my car and drove from my home in Seattle to Mercer Island’s Aubrey Davis Park, where I was to meet one of the moderators who had noticed strange patterns in the race.

I sat down on some empty bleachers near a baseball field. The moderator sat down next to me, pulled out a laptop, and showed me a spreadsheet. (Three of the four Mercer Island moderators I spoke with requested anonymity because they hope to continue moderating for Nextdoor.)

The spreadsheet tracked a series of moderator accounts on Mercer Island that my source had found suspicious. At first, those accounts were targeting posts related to the city-council race, according to my source. My source alerted Nextdoor repeatedly and, after getting no response, eventually emailed Sarah Friar, the company’s CEO. Only then did a support manager reach out and ask for more information. The city-council election had been over for months, but my source had noticed that the same suspicious moderators were removing posts related to Black History Month. The company launched an investigation that revealed “a group of fraudsters,” according to a follow-up email from the support manager, who removed a handful of moderator accounts. But my source noticed that new suspicious moderators kept popping up for weeks, likely as replacements for the ones that were taken down. In total, about 20 Mercer Island moderator accounts were removed.

“We all know there were fake accounts,” an island resident named Daniel Thompson wrote in a long discussion thread last spring. “But what I find amazing is fake accounts could become” moderators.

Danny Glasser, a Mercer Island moderator, explained to me how the interference might have worked. Glasser worked at Microsoft for 26 years, focusing on the company’s social-networking products for more than 15 of them. He’s a neighborhood lead, the highest level of Nextdoor community moderator, and he’s “frustrated” by the seemingly inadequate vetting of moderators.

If a post is reported, Nextdoor moderators can vote “remove,” “maybe,” or “keep.” As Glasser explained: “If a post fairly quickly gets three ‘remove’ votes from moderators without getting any ‘keep’ votes, that post tends to be removed almost immediately.” His suspicion, shared by other moderators I spoke with, is that three “remove” votes without a single “keep” vote trigger a takedown action from Nextdoor’s algorithm. The vulnerability in Nextdoor’s system, he continued, is that those three votes could be coming from, for example, one biased moderator who controls two other sock-puppet moderator accounts. Or they could come from sock-puppet moderator accounts controlled by anyone.
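The rule Glasser and other moderators suspect can be written down in a few lines, which makes the weakness easy to see. The Python sketch below is hypothetical: the threshold of three, the `Vote` record, and the function names are illustrative assumptions drawn from the moderators' description, not Nextdoor's actual code, and the sketch ignores the "fairly quickly" timing element entirely.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical threshold based on moderators' description; Nextdoor has not confirmed it.
REMOVE_THRESHOLD = 3


@dataclass(frozen=True)
class Vote:
    account: str   # moderator account that cast the vote
    operator: str  # the real person behind the account (invisible to the platform)
    choice: str    # "remove", "maybe", or "keep"


def suspected_takedown(votes: list[Vote]) -> bool:
    """Suspected rule: enough 'remove' votes and not a single 'keep' vote."""
    tally = Counter(v.choice for v in votes)
    return tally["remove"] >= REMOVE_THRESHOLD and tally["keep"] == 0


def distinct_operators(votes: list[Vote]) -> int:
    """Count the real people behind the votes, something only knowable off-platform."""
    return len({v.operator for v in votes})


if __name__ == "__main__":
    # One person ("alice") controlling three moderator accounts.
    puppet_votes = [
        Vote("mod_a", "alice", "remove"),
        Vote("mod_b", "alice", "remove"),  # sock puppet
        Vote("mod_c", "alice", "remove"),  # sock puppet
    ]
    print(suspected_takedown(puppet_votes))
    print(distinct_operators(puppet_votes))

    # A single genuine "keep" vote would block the takedown under this rule.
    print(suspected_takedown(puppet_votes + [Vote("mod_d", "dan", "keep")]))
```

Under these assumptions, the first check prints `True` even though only one real person voted, which is exactly the vulnerability Glasser describes: the rule counts accounts, not people, so a single "keep" vote from an independent moderator is the only thing standing in the way.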

Mercer Island moderators told me that biased moderation votes from accounts they suspected were fake occurred over and over during the Akyuz-Anderl contest. “The ones that I know about were all pro-Anderl and anti-Akyuz,” including a number of anti-Akyuz votes that were cast in the middle of the night, one moderator told me: “What are the chances that these people are all going to be sitting by their computers in the 3 a.m. hour?”

Screenshots back up the claims. They show, for example, the “endorsed by Sawant” post, which Akyuz herself reported, calling it “inaccurate and hurtful.” The moderator accounts that considered Akyuz’s complaint included four accounts that disappeared after Nextdoor’s fraudster purge.

Another example documented by the moderators involved a Nextdoor post that endorsed Akyuz and criticized Anderl. It was reported for “public shaming” and removed. All five moderators that voted to take the post down (including two of the same accounts that had previously voted to keep the false “endorsed by Sawant” post) disappeared from Nextdoor after the fraudster purge.

Anderl, for her part, told me she has no illusions about the accuracy of Nextdoor information. “It’s too easy to get an account,” she said. She recalled that, years ago, when she first joined Nextdoor, she had to provide the company with her street address, send back a postcard mailed to her by Nextdoor, even have a neighbor vouch for her. Then, once she was in, she had to use her first and last name in any posts. “I don’t think that’s there anymore,” Anderl said, a concern that was echoed by other Mercer Island residents.

Indeed, when my editor, who lives in New York, tested this claim, he found that it was easy to sign up for Nextdoor using a fake address and a fake name—and to become a new member of Mercer Island Nextdoor while actually residing on the opposite coast. Nextdoor would not discuss how exactly it verifies users, saying only that its process is based “on trust.”

Every social platform struggles with moderation issues. Nextdoor, like Facebook and Twitter, uses algorithms to create the endless feeds of user-generated content viewed by its 42 million “weekly active users.” But the fact that its content is policed largely by 210,000 unpaid volunteers makes Nextdoor different. This volunteer-heavy approach is called community moderation.

When I looked through a private forum for Nextdoor moderators (which has since been shut down), I saw recurring questions and complaints. A moderator from Humble, Texas, griped about “bias” and “collusion” among local moderators who were allegedly working together to remove comments. Another from Portland, Oregon, said that neighborhood moderators were voting to remove posts “based on whether or not they agree with the post as opposed to if it breaks the rules.”

Nearly identical concerns have been lodged from Wakefield, Rhode Island (a moderator was voting “based on her own bias and partisan views”); Brookfield, Wisconsin (“Our area has 4 [moderators] who regularly seem to vote per personal or political bias”); and Concord, California (“There appear to be [moderators] that vote in sync on one side of the political spectrum. They take down posts that disagree with their political leanings, but leave up others that they support”).

Fake accounts are another recurring concern. From Laguna Niguel, California, under the heading “Biased Leads—Making Their Own Rules,” a moderator wrote, “ND really needs to verify identity and home address, making sure it matches and that there aren’t multiple in system.” From Knoxville, Tennessee: “We’ve seen an influx of fake accounts in our neighborhood recently.” One of the responses, from North Bend, Washington, noted that “reporting someone is a cumbersome process and often takes multiple reports before the fake profile is removed.”

In theory, a decentralized approach to content decisions could produce great results, because local moderators likely understand their community’s norms and nuances better than a bunch of hired hands. But there are drawbacks, as Shagun Jhaver, an assistant professor at Rutgers University who has studied community moderation, explained to me: “There’s a lot of power that these moderators can wield over their communities … Does this attract power-hungry individuals? Does it attract individuals who are actually interested and motivated to do community engagement? That is also an open question.”

Using volunteer moderators does cost less, and a recent paper from researchers at Northwestern University and the University of Minnesota at Twin Cities tried to place a dollar value on that savings by assessing Reddit’s volunteer moderators. It found that those unpaid moderators collectively put in 466 hours of work a day in 2020—uncompensated labor that, according to the researchers, was worth $3.4 million. A different paper, published in 2021, described dynamics like this as part of “the implicit feudalism of online communities,” and noted the fallout from an early version of the community-moderation strategy, AOL’s Community Leader Program: It ended up as the subject of a class-action lawsuit, which was settled for $15 million, and an investigation by the U.S. Department of Labor.

Technically, Nextdoor requires nothing of its unpaid moderators: no minimum hours, no mandatory training, nothing that might suggest that the relationship is employer-employee. Further emphasizing the distance between Nextdoor and its volunteer moderators, Nextdoor’s terms of service state in all caps: “WE ARE NOT RESPONSIBLE FOR THE ACTIONS TAKEN BY THESE MEMBERS.”

But if Nextdoor were to take more responsibility for its moderators, and if it paid them like employees, that “could be one way to get the best of both worlds, where you’re not exploiting individuals, but you’re still embedding individuals in communities where they can have a more special focus,” Jhaver said. He added, “I’m not aware of any platform which actually does that.”

Evelyn Douek, an assistant professor at Stanford Law School and an expert on content moderation who occasionally contributes to The Atlantic, told me that what happened in the Akyuz-Anderl race was “somewhat inevitable” because of Nextdoor’s moderation policies. “In this particular case, it was locals,” Douek pointed out. “But there’s no particular reason why it would need to be.” Corporations, unions, interest groups, and ideologues of all stripes have deep interest in the outcomes of local elections. “You could imagine outsiders doing exactly the same thing in other places,” Douek said.

In an indication that Nextdoor at least knows that moderation is an ongoing issue, Caty Kobe, Nextdoor’s head of community, appeared on a late-January webinar for moderators and tackled what she called “the ever-question”: What to do about politically biased moderators? Kobe’s answer was the same one she gave during a webinar in October: Report them to Nextdoor. In 2022, Nextdoor began allowing users to submit an appeal if they felt their post had been unfairly removed. Roughly 10 percent of appeals were successful last year.

Douek’s words stuck in my mind and eventually got me wondering how much effort it would take for me to become a Nextdoor moderator. At the time, the midterm elections were nearing, and Nextdoor was promoting its efforts to protect the U.S. electoral process. I’d joined the platform only a few months earlier, and my sole contribution had been a comment on another person’s post about some local flowers.

I sent a message through Nextdoor’s “Contact Us” page asking if I was eligible to become a moderator. Within a day, I’d been invited to become a review-team member in my neighborhood. “You’re in!” the email from Nextdoor said.

I was offered resources for learning about content moderation on Nextdoor, but I wasn’t required to review any of them, so I ignored them and jumped right in. The first moderation opportunity presented to me by Nextdoor: a comment about Seattle’s Socialist city-council member, Kshama Sawant. It had been reported as disrespectful for comparing her to “a malignant cancer.”

Research for this story was funded by the University of Washington’s Center for an Informed Public, using a grant from the John S. and James L. Knight Foundation.

This article previously identified Daniel Thompson as a Mercer Island Nextdoor moderator. He is not.