Thank you, this was a great read.

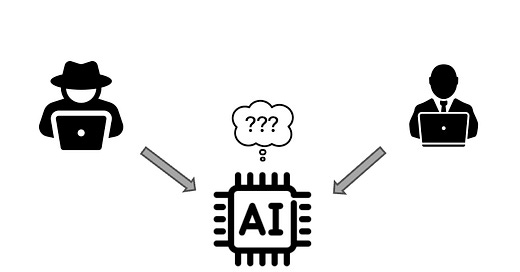

"The assumption that AI safety is a property of AI models is pervasive in the AI community."

Isn't the reason for this that a lot of AI companies claim they're on the pathway to "AGI", and AGI is understood to be human-level intelligence (at least), which translates, in the minds of most people, to a human-level understanding of context and thus a human level of responsibility? It's hard to say a model is as smart as a human, but not smart enough to discern the purpose for which another human is using it.

Put another way, many (though not all) AI companies want you to see their models as being as capable as humans, able to do the tasks humans can at human or near-human levels, only automated, at broader scale, and without the pesky demands human employees make.

Acknowledging that AI models cannot be held responsible for their risky uses puts them in their appropriate place: as a new form of computing with great promise and interesting use cases, but nowhere close to replacing humans, or to matching the role humans play in thinking through and mitigating risk when performing the kinds of tasks AI may be used for.

But that runs contrary to the "AGI in a few years"/singularity narrative, so safety is de facto expected to be an aspect of the intelligence of Artificial Intelligence models. The snake oil salesmen are being asked to drink their own concoctions. Hopefully, at some point, they'll be forced to acknowledge reality.