Comment

A friend of mine just died. He was a great guitar player. A lifetime of drinking and a zillion different drugs. He was forcibly recruited to be a child soldier in the Iran-Iraq war when he was 13. At that age, working as a sharpshooter, he had to kill kids slightly older than himself. Another kid, a friend of his, lacked the same skills as a sharpshooter, so he was ordered to go blow himself up to take out an enemy tank. My friend saw that, and a thousand other horrors. I think he died back…

It's easy to see two negotiating powerhouses like ESPN and Endeavor in a dirty mudslinging contest during media rights hardball meetings. I get it. And I also get that there will likely be some sort of new arrangement (TKO Boxing?) or settlement to let bygones be bygones.

But something doesn't feel right here. If you had asked me two or mont…

Hybrid Shoot author Zack Heydorn, who wrote a book about Austin, finally got to sit down with the man himself. I’m sure that was a big moment!

This is, and I cannot stress this enough, the worst thing Substack could do. The whole POINT of Substack was to get writers away from the advertising revenue model that has completely destroyed journalism. And while I think there are perhaps better ways of packaging subscriptions (Substack magazines/sub bundles should be a more regular t…

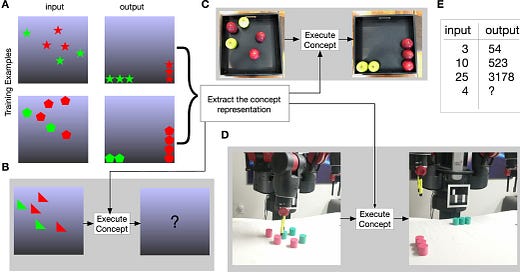

My intuition is that real brains run a massively parallel search algorithm, applying known/learned "motions", not just prediction or pattern matching as is usually assumed.

Like (mini?)columns are an army of simpletons each attempting to do its own unique stupid, simple thing.

PS: more precisely, by search I mean a path search between a source state and a target state: source (state0) -> action1 -> state1 -> action2 -> state2 -> ... -> actionN -> target state.
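To make the "path search between a source and a target state" concrete, here is a minimal sketch using plain breadth-first search. Everything in it is an illustrative assumption: the function name `find_path`, the toy integer states, and the two "motions" (`inc`, `double`) are hypothetical, and BFS is just one simple serial stand-in for whatever parallel search a brain might actually perform.

```python
from collections import deque

def find_path(source, target, actions):
    """Breadth-first search for a sequence of actions from source to target.

    `actions` maps an action name to a function state -> next state,
    returning None when the action does not apply (hypothetical interface).
    """
    frontier = deque([source])
    came_from = {source: None}  # state -> (previous state, action name)
    while frontier:
        state = frontier.popleft()
        if state == target:
            # Walk back from the target to recover the action sequence.
            path = []
            while came_from[state] is not None:
                prev, name = came_from[state]
                path.append(name)
                state = prev
            return path[::-1]
        for name, act in actions.items():
            nxt = act(state)
            if nxt is not None and nxt not in came_from:
                came_from[nxt] = (state, name)
                frontier.append(nxt)
    return None  # target not reachable with the given actions

# Toy example: states are integers, the learned "motions" are +1 and *2.
ACTIONS = {
    "inc": lambda s: s + 1 if s < 100 else None,
    "double": lambda s: s * 2 if s < 100 else None,
}

path = find_path(1, 10, ACTIONS)
print(path)
```

Replaying the returned action names from the source state should land on the target state, which is the source -> action -> state -> ... -> target chain described above.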

Sure, an intuition is as far-fetched as it gets.

Regarding the question, let's call the million simpletons "agents".

I would experiment with a big global state (think of a million-element activation vector) that projects into each local agent's simplified view of the global state.

Each agent's response (action) is then projected back into the global state, changing it.

If they're all under an "animal" virtual skull, then part of the global state generates motions (e.g. camera/wheel movement), and sensory inputs project into the global state too.

Sparsity is assumed, both for global state encoding and for the subset of active agents at any given time, mostly for scaling/cost reasons.

Some global reward feedback is needed too, one that also projects back onto recent local actions.
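The loop described above could be prototyped roughly as follows. This is only a sketch under stated assumptions, not a worked-out design: the sizes, the `Agent` class, the "pick the agents with the strongest drive" rule for sparsity, the decay factor, and the trace-based reward update are all hypothetical choices made for illustration, and the state is shrunk from a million elements to 64 so it runs instantly.

```python
import random

random.seed(0)

STATE_SIZE = 64     # stand-in for the "million-size" activation vector
NUM_AGENTS = 32     # stand-in for the million simpletons
VIEW_SIZE = 4       # each agent sees only a few state components
ACTIVE_AGENTS = 4   # sparsity: only a few agents act per step

class Agent:
    def __init__(self):
        self.view = random.sample(range(STATE_SIZE), VIEW_SIZE)  # read projection
        self.out = random.sample(range(STATE_SIZE), VIEW_SIZE)   # write projection
        self.weights = [random.uniform(-1, 1) for _ in range(VIEW_SIZE)]
        self.trace = 0.0               # how recently this agent acted
        self.last_view = [0.0] * VIEW_SIZE

    def local_view(self, state):
        return [state[i] for i in self.view]

    def drive(self, state):
        # The agent's one simple thing: a weighted sum of its local view.
        return sum(w * x for w, x in zip(self.weights, self.local_view(state)))

def step(state, agents, decay=0.9):
    """One update: the most strongly driven agents write back into the state."""
    ranked = sorted(((abs(a.drive(state)), k) for k, a in enumerate(agents)),
                    reverse=True)
    active = [k for _, k in ranked[:ACTIVE_AGENTS]]
    new_state = [decay * x for x in state]
    for a in agents:
        a.trace *= decay               # older actions earn less credit
    for k in active:
        a = agents[k]
        action = a.drive(state)
        a.last_view = a.local_view(state)
        for i in a.out:
            new_state[i] += 0.1 * action   # project the action back
        a.trace = 1.0
    return new_state, active

def apply_reward(agents, reward, lr=0.01):
    """Global reward, credited to agents in proportion to how recently they acted."""
    for a in agents:
        a.weights = [w + lr * reward * a.trace * x
                     for w, x in zip(a.weights, a.last_view)]

agents = [Agent() for _ in range(NUM_AGENTS)]
state = [1.0 if random.random() < 0.2 else 0.0 for _ in range(STATE_SIZE)]  # sparse start
state, active = step(state, agents)
apply_reward(agents, reward=1.0)
```

Sensory input and motor output would be extra reads from and writes to designated slices of `state`; they are left out here to keep the sketch short.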