
Why I Read This

After studying the historical evolution of hardware (communications, fiber optics, sensors), I became curious about where AI and hardware truly intersect.

Living in San Francisco during the AI boom intensified the question: What role does hardware play beneath the AI narrative?

Core Perspective

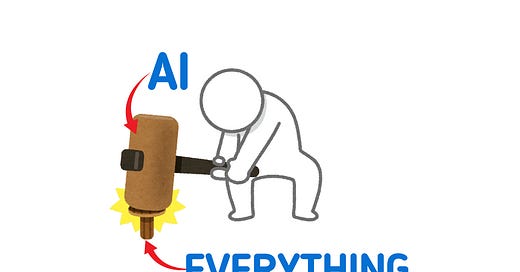

Do not invent problems just to apply AI

The correct order is: problem → method → tool

AI is not always the answer, and LLMs are not universally appropriate

AI as a Structural Shift (Not a Trend)

Unlike other heavily hyped technologies (5G, 6G), AI is reshaping the entire hardware stack

Power, memory, interconnects, cooling, and packaging are now all constrained by the demands of AI workloads

AI will persist beyond hype cycles, like the internet after the dot-com bubble

Why AI/ML Matters for Hardware Engineers

Hardware expertise relies heavily on undocumented, tacit knowledge

It is more effective for hardware experts to learn ML than for ML engineers to learn hardware

ML experience is already becoming a hiring differentiator

The Roadmap (Conceptual, Not a Checklist)

1. Understand Transformers Qualitatively

Focus on intuition, not equations

Know what LLMs can and cannot do
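This step deliberately avoids equations, but for some readers a few lines of code build intuition faster than prose. Below is a minimal sketch in plain Python of scaled dot-product attention for a single query, the core operation inside a transformer: the output is a weighted average of "value" vectors, where each weight reflects how similar the query is to the corresponding "key". The toy vectors are made up for illustration; real models add learned projections, many attention heads, and batched matrix math.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    # Similarity between the query and each key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output: attention-weighted average of the value vectors.
    out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, out

# Toy example: three "tokens" with made-up 2-D keys and values.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, out = attend(query, keys, values)
print([round(w, 3) for w in weights])
print([round(x, 3) for x in out])
```

The first key points in the same direction as the query, so it receives the largest weight, and the output leans toward its value vector. That "look up the most relevant context and blend it" behavior is the intuition worth keeping.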

2. Understand the Full AI Hardware Stack

Not expert-level depth, but enough to explain each layer in casual conversation

Start from simple questions (HBM, interconnects, power delivery)
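One simple question of this kind: why does memory bandwidth (and therefore HBM) matter so much for LLM inference? A common back-of-the-envelope answer is that, when generating one token at a time with batch size 1, every weight must be read from memory once per token, so bandwidth divided by weight size gives a rough ceiling on tokens per second. The sketch below assumes that simplified model and ignores KV-cache traffic, compute limits, and batching; the 7B-parameter, 16-bit, 1 TB/s figures are hypothetical round numbers, not a specific product.

```python
def weight_bytes(num_params, bytes_per_param):
    """Memory needed just to hold the model weights."""
    return num_params * bytes_per_param

def max_decode_tokens_per_s(num_params, bytes_per_param, bandwidth_bytes_per_s):
    """Rough ceiling for batch-1 decoding, assuming it is memory-bandwidth bound.

    Simplifying assumption: all weights are streamed from memory once per token;
    KV-cache reads, compute time, and caching effects are ignored.
    """
    return bandwidth_bytes_per_s / weight_bytes(num_params, bytes_per_param)

# Hypothetical numbers for illustration only.
params = 7e9        # 7B-parameter model
bytes_fp16 = 2      # 16-bit weights
bandwidth = 1e12    # 1 TB/s memory bandwidth

gb = weight_bytes(params, bytes_fp16) / 1e9
tps = max_decode_tokens_per_s(params, bytes_fp16, bandwidth)
print(f"weights: {gb:.0f} GB, decode ceiling: ~{tps:.0f} tokens/s")
# prints "weights: 14 GB, decode ceiling: ~71 tokens/s"
```

Halving the bytes per parameter (for example, 8-bit weights) or doubling bandwidth roughly doubles this ceiling, which is one reason quantization and HBM generations get so much attention.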

3. Learn by Touching the Tools

Start with Python

Move from scikit-learn to PyTorch

Run open-source models locally to build real understanding
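Before reaching for scikit-learn or PyTorch, it can help to see the loop those libraries automate. Below is a minimal sketch in plain Python, assuming a made-up sensor-calibration task: fit `y = w*x + b` to readings by gradient descent on mean squared error. The data are generated from `y = 2x + 1`, so the correct answer is known in advance; the learning rate and step count are illustrative choices.

```python
# Hypothetical calibration data: raw readings xs and reference values ys,
# generated from y = 2x + 1 so the true parameters are known.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]

w, b = 0.0, 0.0   # model parameters, starting from zero
lr = 0.05         # learning rate (step size)
n = len(xs)

for step in range(2000):
    # Gradients of mean squared error with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    # Move both parameters a small step downhill.
    w -= lr * grad_w
    b -= lr * grad_b

print(f"w = {w:.3f}, b = {b:.3f}")
```

In PyTorch the two gradient lines would come from autograd (`loss.backward()`) and the update from an optimizer such as `torch.optim.SGD`; writing the loop by hand once makes those abstractions much less mysterious.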

4. Use AI as a Learning Partner

Don't obsess over prompt tricks

Treat AI as a patient explainer and guide, not a magic solution