
DeepSeek just beat OpenAI’s o1 on many reasoning benchmarks.

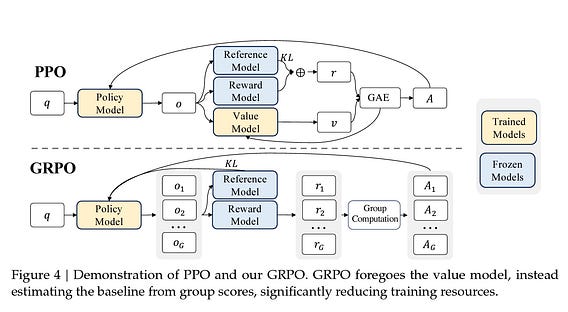

A secret sauce of their approach is training their LLM with Group Relative Policy Optimization (GRPO), an efficient and effective reinforcement learning algorithm.

GRPO forgoes the critic model, instead estimating the baseline from group scores, which significantly reduces training resources compared to Proximal Policy Optimization (PPO).
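A minimal sketch of the group-relative baseline, assuming one scalar reward per sampled completion and the mean/std normalization of outcome rewards described for GRPO in the DeepSeekMath paper. Instead of asking a learned critic for a baseline, each completion's reward is compared with the other completions sampled for the same prompt. The function names, the use of population standard deviation, and the `eps`/`clip_eps` values are illustrative assumptions, and the KL penalty against a reference model that GRPO also applies is omitted here.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-8):
    """Score each completion relative to its own group (no critic model needed).

    Assumption: population std; eps guards against a group with identical rewards.
    """
    mean = statistics.mean(rewards)      # group average acts as the baseline
    std = statistics.pstdev(rewards)     # spread of rewards within the group
    return [(r - mean) / (std + eps) for r in rewards]


def clipped_surrogate(ratio, advantage, clip_eps=0.2):
    """PPO-style clipped objective for one sample, which GRPO keeps.

    ratio = pi_new(token) / pi_old(token); the min takes the pessimistic bound.
    """
    clipped_ratio = max(min(ratio, 1 + clip_eps), 1 - clip_eps)
    return min(ratio * advantage, clipped_ratio * advantage)


# Four completions for one prompt: two judged correct (1.0), two wrong (0.0).
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print([round(a, 3) for a in advantages])
```

Correct answers get a positive advantage and wrong ones a negative advantage, purely from comparison within the group. That is why no separate value network has to be trained or kept in memory.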

Surprisingly, this algorithm was developed by DeepSeek engineers as well.

I see a similar trajectory to OpenAI, which developed Proximal Policy Optimization (PPO) in 2017 and changed how RL worked!

Jan 21 at 1:37 PM
