
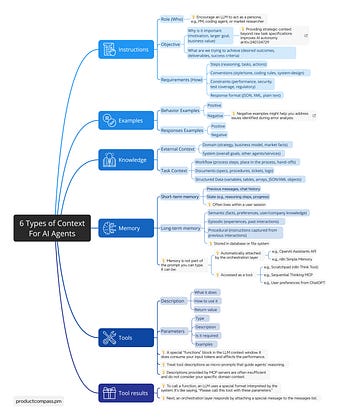

Everyone in AI is talking about Context Engineering.

But only a few explain what the context actually is.

Save this template. It covers every component of an agent's context and will help you maximize your agents' performance:

1. Instructions

Define:

→ Who: the persona the LLM should act as

→ Why: why the task matters (motivation, larger goal, business value)

→ What: what we are trying to achieve (desired outcomes, deliverables, success criteria)

💡Providing strategic context beyond the raw task specification improves AI autonomy (arXiv:2401.04729).
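A minimal Python sketch of how the Instructions part might be rendered into the opening of a system prompt. The `Instructions` class, its field names, and the heading text are hypothetical illustrations, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class Instructions:
    """Hypothetical container for the 'Instructions' part of an agent's context."""
    who: str  # persona the LLM should act as
    why: str  # motivation, larger goal, business value
    what: list[str] = field(default_factory=list)  # outcomes, deliverables, success criteria

    def render(self) -> str:
        # Render plain-text sections that can open a system prompt.
        lines = [
            f"You are {self.who}.",
            "",
            "## Why this matters",
            self.why,
            "",
            "## What we want to achieve",
        ]
        lines += [f"- {item}" for item in self.what]
        return "\n".join(lines)


if __name__ == "__main__":
    instructions = Instructions(
        who="a senior product manager at a B2B SaaS company",
        why="Leadership will use this brief to decide which feature ships next quarter.",
        what=[
            "A one-page feature brief",
            "Success criteria: clear problem statement, target users, measurable goal",
        ],
    )
    print(instructions.render())
```

Keeping the "why" as its own section, rather than folding it into the persona line, makes the strategic context easy to spot and update.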

2. Requirements (How)

Define:

→ Steps to take (reasoning, tasks, actions)

→ Conventions (style/tone, coding rules, system design)

→ Constraints (performance, security, test coverage, regulatory)

→ Response format (JSON, XML, plain text)

→ Examples (positive/negative, responses/behaviors)

💡Negative examples can help you address issues identified during error analysis.
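One way to keep Requirements explicit and checkable, sketched in Python under stated assumptions: the `Requirements` and `Example` classes are hypothetical, and JSON is assumed as the response format so the reply can be validated in code.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Example:
    text: str
    good: bool  # True = positive example, False = negative example


@dataclass
class Requirements:
    """Hypothetical container for the 'Requirements (How)' part of the context."""
    steps: list[str]
    conventions: list[str]
    constraints: list[str]
    response_keys: list[str]  # keys the JSON response must contain (assumed format)
    examples: list[Example] = field(default_factory=list)

    def render(self) -> str:
        def bullets(items: list[str]) -> str:
            return "\n".join(f"- {i}" for i in items)

        sections = [
            "## Steps\n" + "\n".join(f"{n}. {s}" for n, s in enumerate(self.steps, 1)),
            "## Conventions\n" + bullets(self.conventions),
            "## Constraints\n" + bullets(self.constraints),
            "## Response format\nRespond with a JSON object with keys: "
            + ", ".join(self.response_keys),
        ]
        if self.examples:
            # Label each example so negative ones read as "don't do this".
            sections.append("## Examples\n" + "\n".join(
                f"{'GOOD' if e.good else 'BAD'}: {e.text}" for e in self.examples))
        return "\n\n".join(sections)

    def check_response(self, raw: str) -> bool:
        # True only if the reply parses as a JSON object containing every required key.
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            return False
        return isinstance(data, dict) and all(k in data for k in self.response_keys)
```

A failed `check_response` is a natural input for error analysis: recurring failures can become new negative examples.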

3. Knowledge

Define:

→ External Context:

- Domain (strategy, business model, market facts)

- System (overall goals, other agents/services)

→ Task Context:

- Workflow (process steps, hand-offs)

- Documents (specs, procedures, tickets, logs)

- Structured Data (variables, tables, arrays, JSON/XML)
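A sketch of packing Knowledge into the context, assuming XML-style tags are used to separate sources (one common convention, not a requirement). `render_knowledge` and the tag names are hypothetical, and structured data is passed as JSON rather than paraphrased in prose.

```python
import json


def render_knowledge(domain: str, system: str, workflow: list[str],
                     documents: dict[str, str], data: dict) -> str:
    """Hypothetical helper: wraps each knowledge source in XML-style tags so the
    model can tell external context, task context, and raw data apart."""
    parts = [
        "<external_context>",
        f"<domain>{domain}</domain>",
        f"<system>{system}</system>",
        "</external_context>",
        "<task_context>",
        "<workflow>" + " -> ".join(workflow) + "</workflow>",
    ]
    for name, body in documents.items():
        parts.append(f'<document name="{name}">\n{body}\n</document>')
    # Structured data is serialized as-is (sorted keys for stable output).
    parts.append("<data>" + json.dumps(data, sort_keys=True) + "</data>")
    parts.append("</task_context>")
    return "\n".join(parts)
```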

4. Memory

An LLM can access:

→ Short-term memory

- Previous messages, chat history

- State (e.g., reasoning steps, progress)

→ Long-term memory

- Semantic (facts, preferences, user knowledge)

- Episodic (experiences, past interactions)

- Procedural (instructions from previous interactions)

💡Memory is not part of the prompt you type. The orchestration layer can attach it automatically, or the agent can access it as a tool.
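A minimal sketch of an orchestration layer attaching memory to a call. It covers only short-term chat history (a sliding window) and long-term semantic facts. The `Memory` class is hypothetical, and naive keyword overlap stands in for the embedding-based retrieval many systems use.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    """Hypothetical memory store an orchestration layer attaches to each LLM call."""
    max_messages: int = 6                              # short-term window size
    history: list[dict] = field(default_factory=list)  # short-term: chat history
    facts: list[str] = field(default_factory=list)     # long-term: semantic facts

    def add_message(self, role: str, content: str) -> None:
        self.history.append({"role": role, "content": content})

    def recall(self, query: str, k: int = 2) -> list[str]:
        # Rank facts by how many words they share with the query; drop zero matches.
        words = set(query.lower().split())
        scored = [(len(words & set(f.lower().split())), f) for f in self.facts]
        ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: -s[0])
        return [f for _, f in ranked[:k]]

    def build_messages(self, system_prompt: str, user_input: str) -> list[dict]:
        # The user never types these memories; they are injected here.
        recalled = self.recall(user_input)
        memory_note = "\n".join(f"- {f}" for f in recalled) or "- (none)"
        return (
            [{"role": "system",
              "content": f"{system_prompt}\n\nRelevant memories:\n{memory_note}"}]
            + self.history[-self.max_messages:]
            + [{"role": "user", "content": user_input}]
        )
```

The window size trades recall of older turns against input tokens; episodic and procedural memory would need their own stores.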

5. Tools

For each tool, provide a description of what it does and how to use it, its parameters, and its return value.

💡Tool definitions sit in a special “functions” block in the LLM's context window. They consume input tokens and affect performance.

💡Treat tool descriptions as micro-prompts that guide agents’ reasoning.

💡Descriptions provided by MCP servers are often insufficient and do not consider your specific domain context.
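A sketch of a tool description written as a micro-prompt, using a hypothetical `search_tickets` tool for a bug-fixing agent. The JSON-Schema-style shape resembles what many function-calling APIs accept, but exact field names vary by provider. The 4-characters-per-token estimate is only a rough heuristic.

```python
import json

# Hypothetical tool definition; field names follow a common JSON-Schema style,
# not any specific provider's API.
SEARCH_TICKETS_TOOL = {
    "name": "search_tickets",
    "description": (
        "Search the bug tracker for tickets matching a query. "
        "Use it before proposing a fix to check for duplicates or prior work. "
        "Returns a JSON list of {id, title, status}, newest first, at most `limit` items."
    ),
    "parameters": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "Keywords, e.g. an error message."},
            "status": {"type": "string", "enum": ["open", "closed", "any"],
                       "description": "Filter by status; defaults to 'any'."},
            "limit": {"type": "integer", "minimum": 1, "maximum": 50,
                      "description": "Maximum number of results."},
        },
        "required": ["query"],
    },
}


def rough_token_cost(tool: dict) -> int:
    # Rough heuristic (~4 characters per token); real counts depend on the tokenizer.
    return len(json.dumps(tool)) // 4


def missing_required(tool: dict, args: dict) -> list[str]:
    # Lists required parameters the model did not supply in a call.
    return [p for p in tool["parameters"]["required"] if p not in args]
```

Note how the description says when to use the tool and what it returns, not only what it is.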

6. Tool results

💡To call a function, the LLM emits a special format that the system interprets. It’s like saying, “Please call this tool with these parameters.”

💡Next, the orchestration layer runs the tool and attaches the result to the messages list as a special message.
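A sketch of one orchestration step, assuming the model signals a tool call by emitting JSON such as `{"tool": "...", "arguments": {...}}`. The `get_weather` tool, its stubbed result, and the `"role": "tool"` message shape are hypothetical; real APIs define their own tool-call and tool-result formats.

```python
import json


# Hypothetical local tool the orchestration layer can execute.
def get_weather(city: str) -> dict:
    return {"city": city, "temp_c": 21}  # stubbed result for illustration


TOOLS = {"get_weather": get_weather}


def handle_model_output(messages: list[dict], model_output: str) -> list[dict]:
    """One orchestration step under the assumed JSON tool-call format."""
    messages.append({"role": "assistant", "content": model_output})
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return messages  # plain-text answer: nothing to execute
    if not isinstance(call, dict) or call.get("tool") not in TOOLS:
        return messages  # not a recognized tool call
    result = TOOLS[call["tool"]](**call.get("arguments", {}))
    # The result goes back into the message list as a special message,
    # so the model can read it on its next turn.
    messages.append({"role": "tool", "name": call["tool"], "content": json.dumps(result)})
    return messages
```

In a full agent this step runs in a loop: call the model, handle its output, and repeat until it answers in plain text.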

—

Free examples (GitHub):

PM Agent Context: github.com/phuryn/examp…

Bug Fixing Agent Context: github.com/phuryn/examp…

—

Find this helpful?

You might like my Ultimate Guide to Context Engineering: productcompass.pm/p/con…

I also recommend the AI PM Certification. It’s a 6-week cohort taught by Miqdad Jaffer (Product Lead at OpenAI). The next session starts Sep 15, 2025. A special $500 discount for our community: bit.ly/aipmcohort