Two months ago, this MIT study caused alarm with headlines like: "ChatGPT Is Destroying Our Brains," "AI Is Lowering Your IQ," "We're All Getting Dumber."

I finally got around to taking a deeper look at the paper (which is an impressive 200 pages long with 92 figures)! I learned that buried in the actual study was a finding that most of the coverage overlooked.

Here’s my understanding of the study:

(1) The researchers split 54 university students into groups and had them write essays under different conditions: some used only ChatGPT, some used only search (Google), and some used just their brains. They used some funky machines to assess “cognitive load” during essay writing.

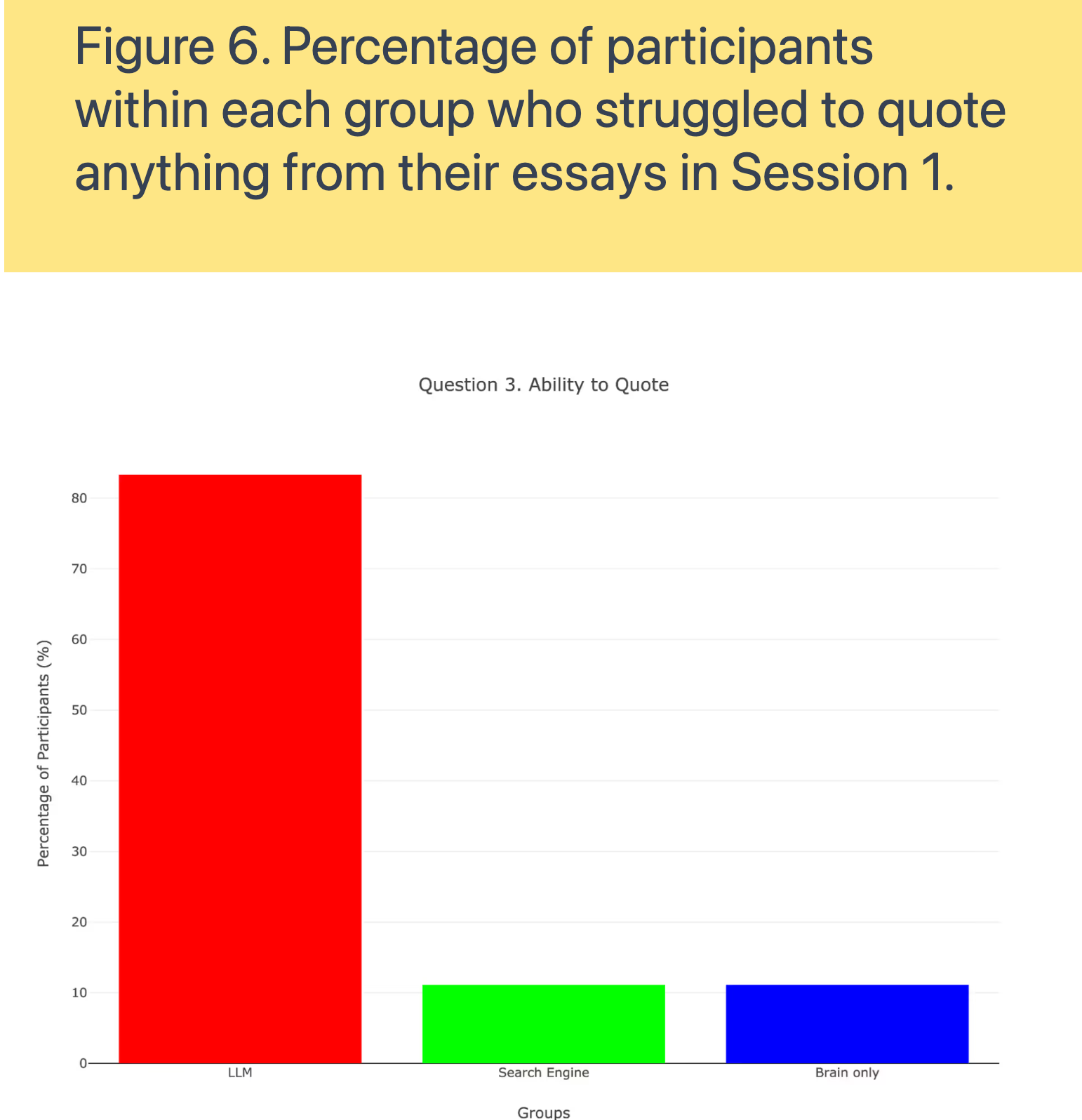

(2) As expected, the ChatGPT group showed the lowest neural activity on EEG scans and had terrible recall. 83% couldn't remember what they'd written, compared to just 11% in the other groups. (See first graph/figure 6)

Seems like case closed. AI makes you cognitively lazy. But not quite.

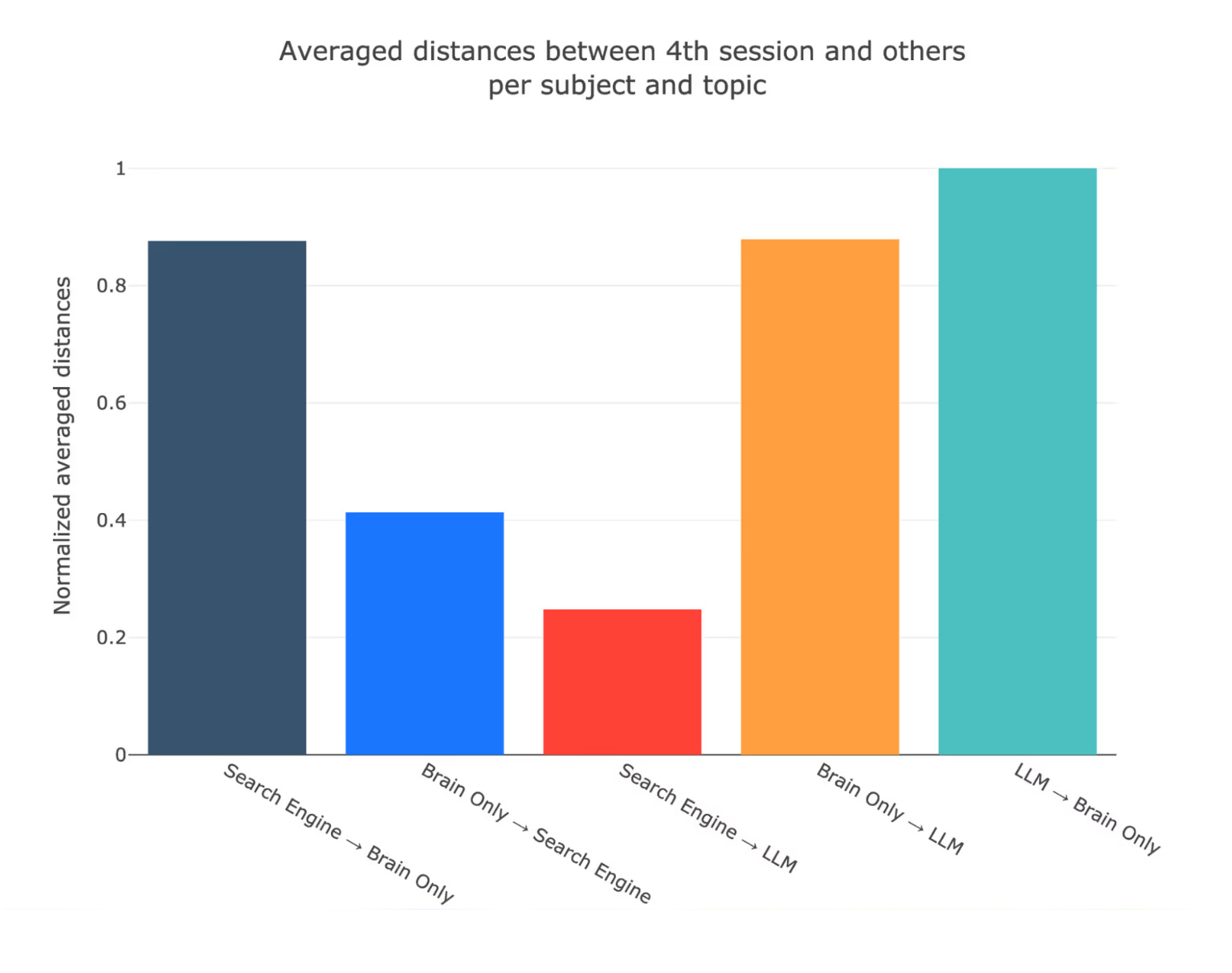

(3) The researchers also tested something else: what happens when you sequence your AI use differently. They had some participants think first, then use AI (Brain → AI), while others used AI first, then switched to thinking (AI → Brain).

(4) Here's the bit that seems to get snowed under by the alarmist headlines:

The Brain → AI group maintained higher cognitive engagement even while using AI. Their EEG readings showed increased neural activity across all frequency bands, and they showed better attention, planning, and memory processing than people who used AI from the start.

(5) Meanwhile, the AI → Brain group never fully recovered their cognitive engagement, even when they stopped using AI.

(6) The key takeaway: How you enter the AI interaction determines whether it amplifies your thinking or replaces it.

This is the difference between thinking by AI vs thinking with AI.

When you use AI as a cognitive enhancement tool, something that challenges and amplifies your own thinking, it can make you sharper.

When you use it as a cognitive replacement tool, something that thinks instead of you, it makes you duller.

This matches exactly what I've been experiencing. There’ve been too many moments when I catch myself reaching for AI as a cognitive crutch, using it to skip past the productive struggle of working through complex problems on my own. It takes skill and patience to use AI like a cognitive enhancement tool, something that challenges and amplifies your thinking.

A surface reading of the study might make us think thinking and LLM use are at odds. But I don’t think that’s true. There’s an art to prompting LLMs in a way that makes you think.

E.g. The recall prompt: “Before you give me any suggestions or analysis, I need to demonstrate my current understanding. Ask me to recall and explain my main thesis, supporting evidence, and biggest concerns from memory. Don't provide any new input until I've successfully articulated these three things. If my recall is incomplete, point out what's missing and ask me to try again.”
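If you want to reuse the recall prompt rather than retype it, one option is to keep it as a fixed first message in front of whatever you're working on. Here's a minimal sketch in Python. The function name `build_recall_first_messages` and the `role`/`content` message shape are my assumptions (that shape is a common chat convention, not something from the study), and the code only builds the messages; it doesn't call any model.

```python
# The recall prompt, stored once so it always comes before any AI input.
RECALL_PROMPT = (
    "Before you give me any suggestions or analysis, I need to demonstrate "
    "my current understanding. Ask me to recall and explain my main thesis, "
    "supporting evidence, and biggest concerns from memory. Don't provide "
    "any new input until I've successfully articulated these three things. "
    "If my recall is incomplete, point out what's missing and ask me to "
    "try again."
)


def build_recall_first_messages(topic: str) -> list[dict[str, str]]:
    """Return a chat-style message list with the recall prompt placed first.

    Hypothetical helper: the "system"/"user" roles are an assumed convention,
    so adapt them to whatever chat tool you actually use.
    """
    return [
        {"role": "system", "content": RECALL_PROMPT},
        {"role": "user", "content": f"I'm working on: {topic}. Start the recall check."},
    ]


if __name__ == "__main__":
    for message in build_recall_first_messages("my essay draft"):
        print(f"[{message['role']}] {message['content']}")
```

The point of the design is the ordering: the prompt sits ahead of your request, so the Brain → AI sequence is the default instead of something you have to remember each time.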

(7) Finally, it’s worth noting this caveat from the authors: “as of June 2025, when the first paper related to the project, was uploaded to Arxiv, the preprint service, it has not yet been peer-reviewed, thus all the conclusions are to be treated with caution and as preliminary.” (Also, check out brainonllm.com, the website that the authors put together.)

Thoughts? What have your experiences been like?