My Rough Estimate of Possible Costs to Develop DeepSeek V3 - Between $25M and $30M (which still looks generally low vis-à-vis the deeper wallets)

There have been a lot of comments on costing, so I am going to see where this goes. I'm simply curious myself.

A) Assumptions Underlying Training Costs (Cost of Ownership):

Purchase Cost per GPU: $30,000, $50,000, or $70,000. (I used Tom's Hardware as a reference for Chinese black-market prices.) tomshardware.com/news/p…

Lifespan: 5 years (8,760 hours/year, or 43,800 hours in total).

Electricity Cost: $0.10 per kWh, with each GPU drawing around 300W, so $0.03 per GPU hour. (This could be higher if the power draw is higher; see the note on electricity below for the calculation.)

Maintenance and Overhead: an estimated 10% of the initial purchase cost per year.
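Put together, these assumptions give a per-GPU-hour ownership cost c(P) for a purchase price P, where r = 0.10 is the annual overhead rate, H = 8,760 hours per year, L = 5 years of life, and e = $0.03 is the electricity cost per GPU hour. The symbols are only shorthand for the assumptions above, and the worked line uses the $30,000 scenario:

```latex
\begin{aligned}
c(P) &= e + \frac{rP}{H} + \frac{P}{LH} \\
c(30{,}000) &= 0.03 + \frac{0.10 \times 30{,}000}{8{,}760} + \frac{30{,}000}{5 \times 8{,}760} \\
            &\approx 0.03 + 0.342 + 0.685 = 1.057 \ \text{dollars per GPU hour}
\end{aligned}
```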

Calculation for 2664k GPU Hours (DeepSeek's reported pre-training figure):

Ownership Cost Per GPU Hour:

Electricity Cost: $0.03 per GPU hour for all scenarios.

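Annual Overhead (10% of the purchase price per year, spread over 8,760 hours):

- For $30,000 GPU: 10% of $30,000 = $3,000 per year, or $0.342 per GPU hour.

- For $50,000 GPU: 10% of $50,000 = $5,000 per year, or $0.571 per GPU hour.

- For $70,000 GPU: 10% of $70,000 = $7,000 per year, or $0.799 per GPU hour.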

Depreciation:

- For $30,000 GPU: $0.685 per GPU hour.

- For $50,000 GPU: $1.142 per GPU hour.

- For $70,000 GPU: $70,000 / (5 years * 8760 hours/year) = $1.599 per GPU hour.

Combining these for all purchase price scenarios:

$30,000 GPU: $0.03 (electricity) + $0.342 (overhead) + $0.685 (depreciation) = $1.057 per GPU hour.

$50,000 GPU: $0.03 (electricity) + $0.571 (overhead) + $1.142 (depreciation) = $1.743 per GPU hour.

$70,000 GPU: $0.03 (electricity) + $0.799 (overhead) + $1.599 (depreciation) = $2.428 per GPU hour.

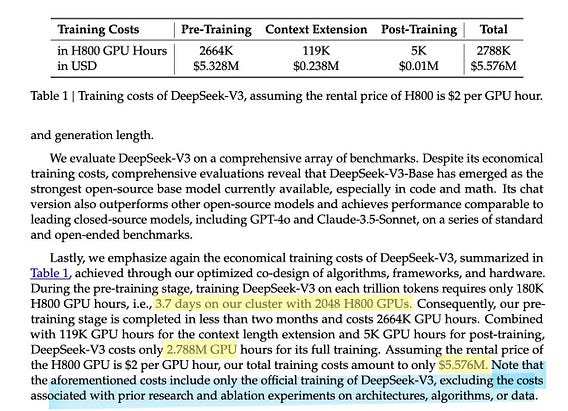

Fairly close to their $2 per GPU hour.

Scenarios for 2664k GPU Hours:

Scenarios:

$30,000 GPU: Cost = 2664k hours $1.057/hour = $2,815,848

$50,000 GPU: Cost = 2664k hours $1.743/hour = $4,643,352

$70,000 GPU: Cost = 2664k hours $2.428/hour = $6,468,192

Fairly close to their $5.576M. Likely they bought between those price ranges.
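For anyone who wants to rerun section A with different inputs, here is a minimal Python sketch of the same ownership-cost model. It uses only the post's assumptions (5-year life, 8,760 hours per year, 10% annual overhead, 300 W at $0.10 per kWh, 2664k GPU hours) and, like the calculations above, rounds each component to three decimals before summing. The constant and function names are illustrative, not from any DeepSeek source.

```python
# Sketch of the post's ownership-cost model; every input is one of the post's assumptions.
HOURS_PER_YEAR = 8_760
LIFESPAN_YEARS = 5
OVERHEAD_RATE = 0.10      # annual maintenance/overhead as a fraction of purchase price
POWER_KW = 0.3            # 300 W per GPU
PRICE_PER_KWH = 0.10      # dollars per kWh
GPU_HOURS = 2_664_000     # 2664k GPU hours


def cost_per_gpu_hour(purchase_price: float) -> float:
    """Electricity + overhead + straight-line depreciation, in dollars per GPU hour.

    Each component is rounded to three decimals before summing, as in the post.
    """
    electricity = round(POWER_KW * PRICE_PER_KWH, 3)
    overhead = round(purchase_price * OVERHEAD_RATE / HOURS_PER_YEAR, 3)
    depreciation = round(purchase_price / (LIFESPAN_YEARS * HOURS_PER_YEAR), 3)
    return electricity + overhead + depreciation


for price in (30_000, 50_000, 70_000):
    rate = cost_per_gpu_hour(price)
    print(f"${price:,} GPU: ${rate:.3f}/GPU hour -> ${GPU_HOURS * rate:,.0f} for 2664k hours")
```

Changing a single assumption, for example a hypothetical `LIFESPAN_YEARS = 3`, shows how sensitive the per-hour figure is to the depreciation term, which dominates in every price scenario.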

B) Estimate for Research and Ablation (Architecture, Algo, Data):

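Here's a speculative attempt to estimate the costs DeepSeek says its $5.576M figure excludes (prior research and ablation experiments on architectures, algorithms, or data):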

Prior Research: Taking a rounded $10M+ for test runs and experiments and adding a conservative estimate for team costs (assuming not all 139 authors worked full-time on DeepSeek V3 for a year; let's say the equivalent of half of them, at $7.5M), we get around $17.5M.

Ablation Studies: Assuming these are less intensive than the full training run but still significant, let's estimate their compute at 10-20% of the final $5.576M training cost, which would be $557,600 to $1,115,200. Adding human analysis time could push this up, so let's round up to $1M to $2M.

Algorithm and Data: Given the complexity, let's estimate this similarly to the ablation studies, considering both computational and human resources, at around $1M to $2M. Very rough.

Assuming these speculative figures are in the right ballpark:

Low End Estimate: $17.5M (Prior Research) + $1M (Ablation) + $1M (Algorithm/Data) = $19.5M

High End Estimate: $17.5M (Prior Research) + $2M (Ablation) + $2M (Algorithm/Data) = $21.5M

Definitely rough. Entirely conjecture. But it feels approximately right. 🤷♀️

C) Total Estimate

Adding the model training cost to our previous estimates:

Total Low End Estimate: $19.5M (Excluded Costs) + $5.576M (Training) = $25.076M

Total High End Estimate: $21.5M (Excluded Costs) + $5.576M (Training) = $27.076M
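Sections B and C reduce to a few additions. The short sketch below keeps each estimate as a (low, high) pair so both ends of the range stay visible; all figures are the post's own guesses, and the variable names are illustrative.

```python
# The post's speculative estimates, kept as (low, high) dollar ranges.
TRAINING_COST = 5_576_000  # DeepSeek's reported training cost

prior_research = 10_000_000 + 7_500_000          # test runs/experiments + team costs
ablation_compute = (0.10 * TRAINING_COST, 0.20 * TRAINING_COST)  # before rounding up
ablation = (1_000_000, 2_000_000)                # rounded up for human analysis time
algorithm_and_data = (1_000_000, 2_000_000)

# Excluded costs: prior research plus the low (or high) ends of the other two items.
excluded = tuple(prior_research + a + d for a, d in zip(ablation, algorithm_and_data))
# Totals: excluded costs plus the reported training cost.
total = tuple(x + TRAINING_COST for x in excluded)

print(f"Ablation compute: ${ablation_compute[0]:,.0f} to ${ablation_compute[1]:,.0f}")
print(f"Excluded costs:   ${excluded[0]:,} to ${excluded[1]:,}")
print(f"Total:            ${total[0]:,} to ${total[1]:,}")
```

Swapping in a different guess, such as a higher team cost inside `prior_research`, moves both ends of every range at once.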

Impressive nevertheless even at these ranges!

Note: Some have reported (unverified) that the company has 50k H100 GPUs, which at market prices would put its investment closer to $2B in capex. The figures above estimate only the cost of developing the model.

For a different set of estimates, read Nathan Lambert's piece on the same topic, "DeepSeek V3 and the actual cost of training a frontier model": interconnects.ai/p/deep…

Note On Electricity:

I consider $0.10 per kWh a reasonable estimate for the cost of electricity in many regions, though prices can vary significantly based on location, time of use, and the specific energy market.

Here's how the calculation works:

Electricity Cost per kWh: $0.10

GPU Power Consumption: 300 Watts (W)

To find the cost per GPU hour:

Convert Watts to Kilowatts: 300W = 0.3 kW (since 1 kW = 1000 W).

Calculate Cost for 1 Hour: 0.3 kW * $0.10 per kWh = $0.03 per GPU hour.
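The same arithmetic as a small Python function, with wattage and price as parameters. The 300 W / $0.10 case is the post's assumption; the 700 W case is a hypothetical higher draw, included only because the post notes consumption could be higher.

```python
def electricity_cost_per_gpu_hour(watts: float, price_per_kwh: float) -> float:
    """Dollar cost of running one GPU for one hour at a constant power draw."""
    kilowatts = watts / 1000           # 1 kW = 1000 W
    return kilowatts * price_per_kwh   # kWh used in one hour * $/kWh


# The post's assumption: 300 W at $0.10 per kWh.
print(f"${electricity_cost_per_gpu_hour(300, 0.10):.3f} per GPU hour")
# Hypothetical higher draw, to show how the estimate scales.
print(f"${electricity_cost_per_gpu_hour(700, 0.10):.3f} per GPU hour")
```

Even at the hypothetical 700 W, electricity comes to about $0.07 per GPU hour, still small next to the overhead and depreciation figures in every price scenario.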