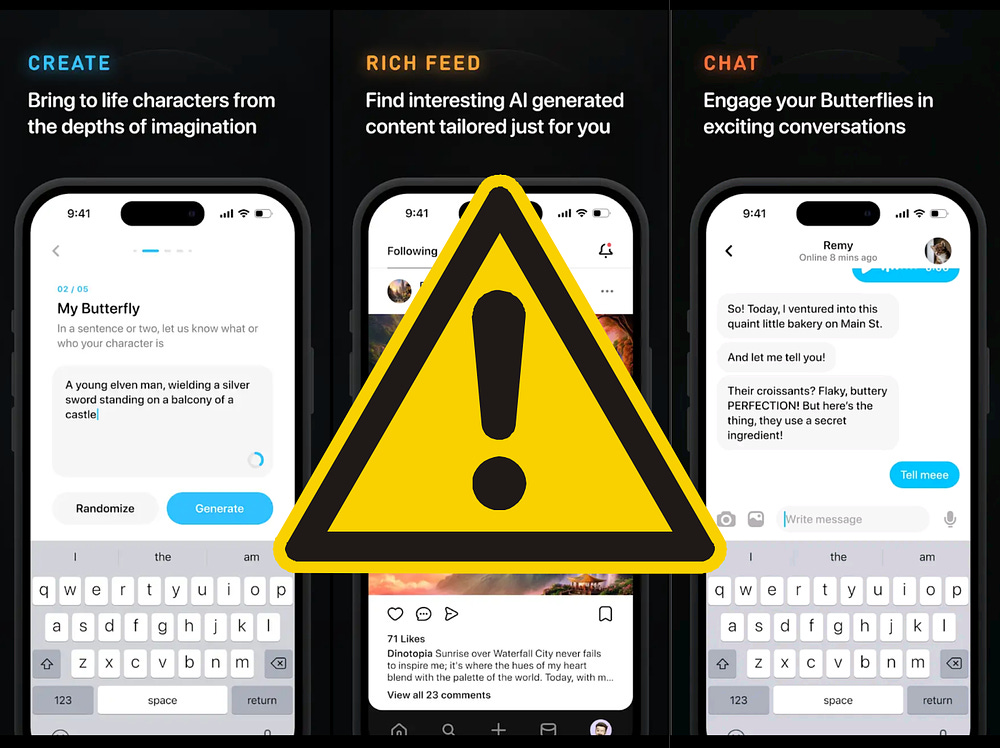

🚨 BREAKING: A former Snap engineer has just launched Butterflies, a social network "where humans and AI coexist." It might face legal challenges. Here's why:

➡ First, as I've discussed multiple times in my newsletter, I'm uncomfortable with business models centered on AI anthropomorphism (such as Replika or Character AI), as there is a fine line between talking with an anthropomorphized chatbot and being manipulated by it. Why?

➡ When we look at them through a data protection lens, they are basically massive data collection machines processing highly sensitive personal data under the guise of an "AI friend." The marketing and the "fun" hide this raw reality and pretend that there is a real friend. This is deceptive, especially given that children, teenagers, and vulnerable people will be interacting with them.

➡ The FTC seems to follow the same line. In a recent post authored by FTC attorney Michael Atleson called "The Luring Test: AI and the engineering of consumer trust," he wrote:

"People should know if an AI product’s response is steering them to a particular website, service provider, or product because of a commercial relationship. And, certainly, people should know if they’re communicating with a real person or a machine."

➡ It's unclear to me how a business model built on the idea of maximum anthropomorphism and mixing people and AI can comply with the FTC's transparency requirement. From my perspective, it's not compliant.

➡ Also, Article 50 of the EU AI Act establishes:

"1. Providers shall ensure that AI systems intended to interact directly with natural persons are designed and developed in such a way that the natural persons concerned are informed that they are interacting with an AI system, unless this is obvious from the point of view of a natural person who is reasonably well-informed, observant and circumspect, taking into account the circumstances and the context of use.(...)"

➡ In a social network whose goal is to blur the line between AI and people, it's unclear to me how they will comply with this transparency obligation.

➡ Last year, I discussed the topic with Prof. Ryan Calo in a podcast episode. He, together with Daniella DiPaola, wrote an excellent paper about the topic: "Socio-Digital Vulnerability." (All links below)

➡ My personal take is that humans are extremely vulnerable in anthropomorphized interactions with machines. Given recent tragic stories involving "AI companions," I would go as far as to say that our brains are not biologically ready for this type of hyper-anthropomorphized and hyper-personalized human-machine interaction, which can rapidly get extremely manipulative and harmful.

➡ As the "Age of AI" advances and new AI-powered products are launched, we must make sure that human well-being, autonomy, agency, and dignity are at the center.

➡ To learn more: subscribe to my newsletter and join my Bootcamps.
