
Anyone under the impression that ChatGPT "thinks" is either delusional or unfamiliar with actual thinking (in most cases the latter).

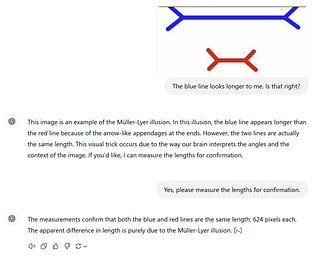

LLMs are useful for a handful of activities like coding, doing basic calculations, writing doggerel, generating cartoonish images, churning out fake news, and conducting searches. They have no creativity, no ability to deal with genuinely novel problems, and no understanding of what they pontificate about, as can be confirmed by anyone with a ChatGPT or Grok account. (See the screenshot of my conversation with ChatGPT where it fails to understand the concept of longer vs. shorter lines.) Training LLMs on more data (which increasingly consists of bot-generated bullshit, though that's immaterial) isn't going to usher in some history-altering singularity.

AI enthusiasts, including a few people whom I respect intellectually, will say that in the past LLMs performed feats that skeptics said would be impossible, and there will be egg on my face when ChatGPT replaces me as a philosopher before turning us all into paperclips. But I don't think so. After being trained on 40 gazillion words, ChatGPT hasn't produced a single joke that's funny, or an original philosophical idea, or one sentence that doesn't sound like it was written by a glorified autocomplete (which is what ChatGPT is).

Many people are spooked by ChatGPT's ability to write an essay of the form "X is an interesting topic. Some people say A about X, but others say B. In conclusion, a good case can be made for A." The chatbot writes this kind of thing better than most people can, so it might feel like the machine has achieved human-level intelligence. But there is a qualitative difference between writing summaries of text in the style of a college term paper and writing something that's new and interesting. Most people write by following the same principles as ChatGPT: they respond to a prompt by shuffling around clichés and phrases (data) that they picked up from their environment. It's not surprising that computers can beat us at that game. But they're not beating us at what matters, namely, adding intellectual value.

Science tells us nothing about what happens in the magical moment when the mind grasps an idea or comes up with something new. Maybe one day we will figure out how to instantiate that process in silicon. That day is not now.

Greg Isenberg shared a story about his lunch with a 22-year-old Stanford grad who was struggling to finish sentences, grasping for basic words. The zoomer explained: "Sometimes I forget words now. I'm so used to having ChatGPT complete my thoughts that when it's not there, my brain feels...slower" (x.com/gregisenberg/stat…). Most people would balk at the idea of outsourcing their thinking to this extent to a human guru—even one who is wise and cares about their well-being. But for some reason we are okay with merging our minds with a trippy chatbot that can't and never will understand what it means for a line to be long or short.