
Some people claim that AI doesn't hurt anyone, that its uses aren't taking real work from humans. That argument is disingenuous.

AI was always going to take work from people, but we like a good story, the one where it's just harmless animated cat videos all the way down. I use a few AI tools. They’re useful. But we need to talk about the hidden cost (especially for young creatives, coders, and grads trying to break in).

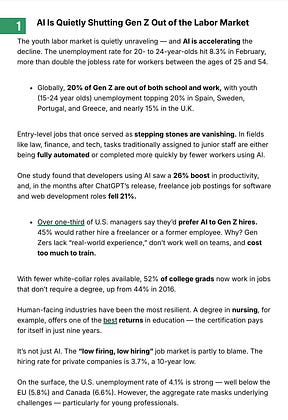

For example, in the author world we’ve long criticised publishers for promoting only top talent and shutting the door on new voices. The screenshot from ProfG Markets shows something similar happening everywhere because of AI. Decision-makers are avoiding risk, chasing cost-cutting, and quietly sandblasting out of existence the stepping stones that once led people into their careers.

Entry-level roles aren’t evolving. They’re disappearing, and many are being replaced by AI models trained on pirated content and optimised to perform just well enough to cut humans out of the loop. There’s rage among creatives who’ve had their work stolen to feed these machines (itsa me!), but there’s a deeper layer: our work is being used to dismantle other people’s lives.

This isn’t just a consent issue. It’s about complicity. The stuff we made to lift others up is being retooled to keep them out.

I use AI to assist, not replace. It helps me stay sharp, not skip the hard parts. There’s a big difference between augmenting creativity and automating it out of existence. Pandora's box is well and truly open. We're going to have to live with what's been done. The commercial interests are too massive, and the tech bros too entrenched, for the toothpaste to go back in the tube.

But what if we told different stories?

Imagine if Microsoft, one of the richest companies on Earth, chose not to lay off 10,000+ people. Imagine it expanded its grad programme and created a future for humanity alongside its AI tools. That’s a headline worth writing. But it won’t fly with Wall Street, will it?

Next time you spend money with a company that makes AI tools, consider:

It’s not just what you buy.

It’s who you’re supporting.

And what they’re building with that support.

This might sound reductive, but it still cuts to the issue: where’s the line? Using AI ethically means being mindful of who’s getting left behind. The goal shouldn’t be to replace everyone. It should be to build smarter, more sustainable systems that don’t just let people in but help them become the best they can be.

Imagine if AI’s arrival came with a promise to build a better humanity, not replace it.

Wall Street said no.

They don’t have to be the final arbiter.