Finally, the paper for Qwen2.5-Omni is out!

Qwen2.5-Omni is arguably the first truly integrated multimodal model that both hears and speaks. And it does so while thinking.

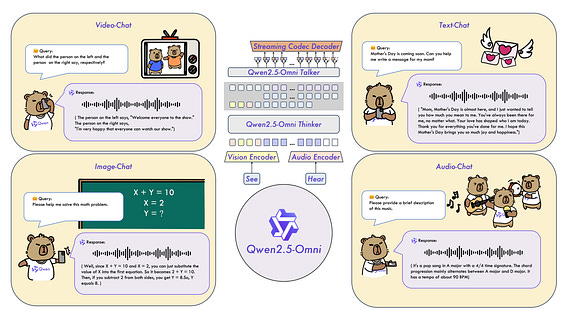

The researchers achieved this through their novel "Thinker-Talker" architecture - where Thinker processes multimodal inputs and generates text, while Talker produces natural speech based on the Thinker's representations.
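To make the split concrete, here is a toy sketch of the data flow only. It is not the paper's implementation: `ToyThinker`, `ToyTalker`, `ThinkerOutput`, and `respond` are hypothetical names, and the "hidden" values and speech tokens are placeholders standing in for real model representations and audio.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ThinkerOutput:
    text: str            # the text response
    hidden: List[float]  # placeholder for the Thinker's internal representations


class ToyThinker:
    """Hypothetical stand-in: takes multimodal inputs, returns text plus representations."""

    def run(self, inputs: Dict[str, str]) -> ThinkerOutput:
        modalities = sorted(inputs)
        text = "I received: " + ", ".join(modalities)
        # Fake "representation": one number per input modality.
        hidden = [float(len(inputs[m])) for m in modalities]
        return ThinkerOutput(text=text, hidden=hidden)


class ToyTalker:
    """Hypothetical stand-in: turns the Thinker's representations into speech tokens."""

    def run(self, thinker_out: ThinkerOutput) -> List[str]:
        # One placeholder speech token per representation value.
        return [f"<speech_{i}>" for i, _ in enumerate(thinker_out.hidden)]


def respond(inputs: Dict[str, str]) -> Tuple[str, List[str]]:
    # The Talker consumes the Thinker's output rather than the raw inputs.
    thinker_out = ToyThinker().run(inputs)
    speech = ToyTalker().run(thinker_out)
    return thinker_out.text, speech


text, speech = respond({"audio": "hello.wav", "image": "cat.png"})
print(text)
print(speech)
```

The point of the sketch is the dependency direction: the Talker never sees the raw audio or image, only what the Thinker hands it.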

Sometimes the whole is greater than the sum of its parts:

Qwen2.5-Omni doesn't just combine modalities - it integrates them into a cohesive system that outperforms specialized models in audio understanding, matches the state of the art in image understanding, and excels at speech generation.

Mar 27 at 9:59 AM
