
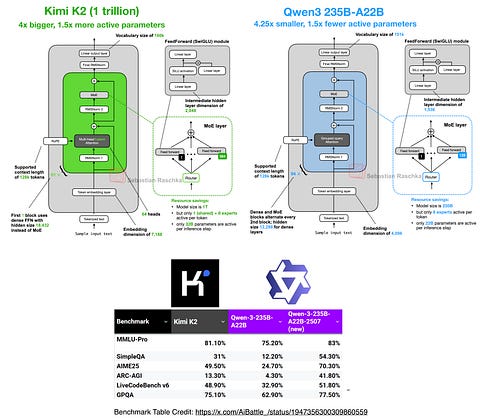

It has been just 10 days since the Kimi 2 release, and the Qwen team has already updated its largest Qwen 3 model to take back the benchmark crown from Kimi 2 in most categories.

Some highlights of how Qwen3 235B-A22B differs from Kimi 2:

- 4.25x smaller overall but has more layers (transformer blocks); 235B vs 1 trillion

- about 1.5x fewer active parameters (22B vs 32B)

- far fewer experts in MoE layers (128 vs 384); the experts are also slightly smaller

- doesn't use a shared expert (but otherwise also has 8 active experts)

- alternates between dense and MoE blocks in every other layer (transformer block)

- grouped query attention instead of multi-head latent attention
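
The size ratios in the list can be checked with a few lines of arithmetic. This is only a sketch using the figures quoted above; the dictionary keys and the `ratio` helper are illustrative names, and "1 trillion" is taken as exactly 1000B, which is a rounding assumption.

```python
# Figures quoted in the list above (parameter counts in billions).
# "1 trillion" is treated as exactly 1000B, which is an assumption.
qwen3 = {"total_b": 235, "active_b": 22, "experts": 128}
kimi2 = {"total_b": 1000, "active_b": 32, "experts": 384}


def ratio(key):
    """How many times larger the Kimi 2 figure is than the Qwen3 figure."""
    return kimi2[key] / qwen3[key]


ratios = {key: round(ratio(key), 2) for key in qwen3}

# Share of all parameters that are active per token, in percent.
active_share = {
    "qwen3": round(100 * qwen3["active_b"] / qwen3["total_b"], 1),
    "kimi2": round(100 * kimi2["active_b"] / kimi2["total_b"], 1),
}

print(ratios)
print(active_share)
```

The ratios agree with the rounded values in the list (roughly 4.25x, 1.5x, and 3x). The second dictionary shows that, by these numbers, Qwen3 activates a larger share of its parameters per token than Kimi 2 does.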

Jul 21 at 8:36 PM
