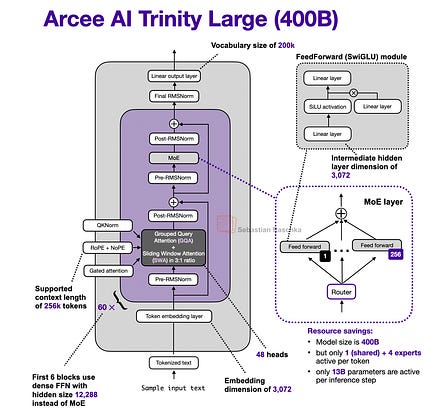

It's been a while since I did an LLM architecture post. I just stumbled upon the Arcee AI Trinity Large release + technical report that came out yesterday and couldn't resist:

- 400B param MoE (13B active params)

- Base model performance similar to GLM 4.5 base

- Alternating local (sliding-window) and global attention layers in a 3:1 ratio, like Olmo 3

- QK-Norm (popular since Olmo 2) and NoPE (e.g., SmolLM3)

- Gated attention like Qwen3-Next

- Sandwich RMSNorm (kind of like Gemma 3 but depth-scaled)

- DeepSeek-like MoE with lots of small experts, but they made the experts coarser, as that helps with inference throughput (something we have also seen in Mistral 3 Large when they adopted the DeepSeek V3 architecture)
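To make the 3:1 local:global pattern from the list above a bit more concrete, here is a minimal sketch in plain Python. The function names, the assumption that every fourth layer is the global one, and the tiny window size are all mine for illustration; they are not taken from the Trinity Large report.

```python
def layer_schedule(num_layers, local_per_global=3):
    """Label each layer 'local' or 'global'.

    Assumption (illustrative only): each run of `local_per_global`
    sliding-window layers is followed by one global layer.
    """
    period = local_per_global + 1
    return ["global" if (i + 1) % period == 0 else "local"
            for i in range(num_layers)]


def causal_mask(seq_len, window=None):
    """mask[q][k] is True if query position q may attend to key position k.

    window=None gives full causal (global) attention; an integer window
    restricts each query to the most recent `window` positions, itself included.
    """
    return [[k <= q and (window is None or q - k < window)
             for k in range(seq_len)]
            for q in range(seq_len)]


schedule = layer_schedule(8)            # 3 local layers, then 1 global, repeated
local_mask = causal_mask(5, window=2)   # toy window size, hypothetical
global_mask = causal_mask(5)

for name, mask in (("local", local_mask), ("global", global_mask)):
    print(name)
    for row in mask:
        print("".join("x" if allowed else "." for allowed in row))
```

The practical upside is that the local layers only need to keep the last `window` keys and values around, so the KV cache grows with sequence length only in the global layers.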

Added a slightly longer write-up to my The Big Architecture Comparison article: magazine.sebastianrasch…