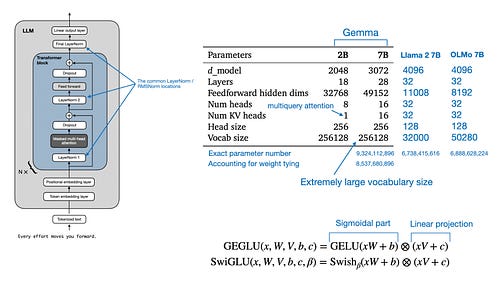

Google's Gemma has been the topic of the week for both LLM researchers and users. My colleagues and I just ported the code to LitGPT, and we discovered some interesting surprises and model architecture details along the way:

1) Gemma uses a really large vocabulary and, consequently, really large embedding weight matrices.

2) Like OLMo and GPT-2, Gemma also uses weight tying.

3) It even uses GELU (in the form of GeGLU), similar to GPT-2, whereas Llama 2 and many other LLMs use SiLU-based SwiGLU.

4) Interestingly, Gemma scales the embeddings by the square root of the hidden layer dimension (it multiplies them by that factor).

5) The RMSNorm layers are in the usual location, different from what was hinted at in the technical paper.

6) However, the RMSNorm implementation comes with a unit offset (the normalized activations are scaled by 1 + weight rather than by weight alone) that wasn't mentioned in the technical paper.
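
To make points 2, 3, 4, and 6 a bit more concrete, here is a minimal sketch in plain Python (lists instead of tensors). It is not the LitGPT or Google code. The function names, the toy sizes, and the `eps` value are my own assumptions for illustration, and I use the exact erf-based GELU here even though real implementations may use a tanh approximation.

```python
import math


def gelu(x):
    # Exact GELU via erf (assumption: real code may use the tanh approximation).
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def matvec(matrix, vec):
    # Multiply a (rows x cols) matrix by a vector of length cols.
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def scale_embedding(vec):
    # Point 4: multiply the token embedding by sqrt(hidden_dim).
    factor = math.sqrt(len(vec))
    return [v * factor for v in vec]


def rmsnorm_unit_offset(x, weight, eps=1e-6):
    # Point 6: RMSNorm where the learned scale is applied as (1 + weight).
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [(v / rms) * (1.0 + w) for v, w in zip(x, weight)]


def geglu(x, w_gate, w_up):
    # Point 3: GELU-gated linear unit, GELU(W_gate x) * (W_up x), elementwise.
    gate = matvec(w_gate, x)
    up = matvec(w_up, x)
    return [gelu(g) * u for g, u in zip(gate, up)]


def tied_logits(hidden, embedding_table):
    # Point 2: weight tying, the embedding table doubles as the output projection.
    return matvec(embedding_table, hidden)


# Toy usage: vocabulary of 3 tokens, hidden dimension of 4 (hypothetical values).
embedding_table = [
    [0.1, -0.2, 0.3, 0.4],
    [0.5, 0.1, -0.3, 0.2],
    [-0.4, 0.2, 0.1, 0.0],
]
h = scale_embedding(embedding_table[1])
h = rmsnorm_unit_offset(h, weight=[0.0] * 4)
logits = tied_logits(h, embedding_table)  # one logit per vocabulary entry
```

One consequence of the unit offset: a weight vector of all zeros gives a scale of exactly 1, so the layer acts as a plain RMS normalization in that case.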