How AI Companies Trick You Into Training Their Models

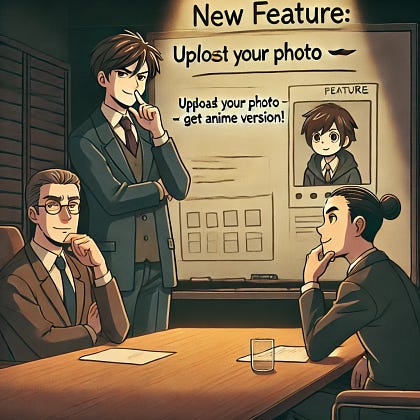

🧑💼 Exec 1: Our AI models are falling behind in facial recognition and image generation. We need more data.

👩💼 Exec 2: But getting enough high-quality images will take too long.

🤵 Exec 3: What if we launch a fun feature that encourages people to upload their own photos?

🧑💼 Exec 1: 😂 LOL. People won't fall for that anymore. They already post less personal content on social media.

🤵 Exec 3: 🤔 Hear me out. We offer a tool that transforms a personal photo into an anime version. People will love it. They’ll share the results, and we’ll get a fresh batch of faces to train our AI.

🧑💼 Exec 1: 😏 That might work… but will they really go for it?

🤵 Exec 3: ✅ They’ll think it’s just for fun. Meanwhile, we improve our dataset—for free.

🚨 Be mindful of what you upload. Your personal data is more valuable than ever as AI technology evolves. Gen AI tools like ChatGPT and Gemini are constantly seeking new ways to collect data, often disguising that collection as entertainment.

This raises critical privacy and cybersecurity concerns.

Are we unknowingly training AI models that could later be used for facial recognition, surveillance, or other invasive applications?

Let us know what you think - do you see OpenAI's Ghibli-style image generator as clever marketing or a potential privacy trap?

Interested in private Gen AI? Check out our post here: secretsofprivacy.com/p/…