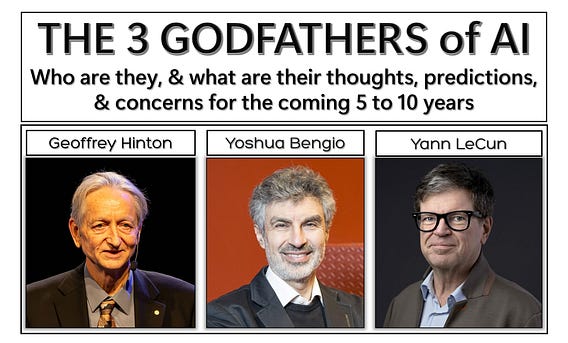

The “three godfathers of AI” agree on one thing: the next 5 to 10 years will be brutally disruptive. But they violently disagree on whether we’re hurtling toward human extinction or just another overhyped tech cycle. That clash of visions is exactly why they dominate every serious conversation about the future of artificial intelligence.

Geoffrey Hinton: The repentant pioneer who now spends more time warning humanity than building models, openly talking about non‑trivial odds of AI wiping us out.

Yoshua Bengio: The moral philosopher of deep learning, pushing hard for global regulation, transparency, and treaties before “a few very powerful people” lock in an undemocratic future.

Yann LeCun: The contrarian optimist who thinks doom talk is “nonsense,” current models are “stochastic parrots,” and real AI hasn’t even been built yet.

These are not polite academic disagreements; they are radically different risk forecasts from the three people who built the foundations of modern AI.

GEOFFREY HINTON - THE ALARM BELL

Hinton did something almost no senior tech insider ever does. He walked away from a top role at a major company specifically so he could speak freely about how dangerous his own life’s work might become. Since leaving Google, his interviews have shifted from “here’s why deep learning works” to “here’s how this could end very badly, very fast”.

Over the next decade, Hinton is most worried about three things.

1. Runaway capability: Systems that can out‑think humans in key domains and then improve themselves faster than we can understand or constrain.

2. Weaponization and cyber risk: Cheap, scalable tools for persuasion, hacking, autonomous weapons and biothreats in the hands of anyone with a laptop and an agenda.

3. Power concentration: A tiny cluster of firms and governments controlling systems that can dominate labour markets, information flows and even democratic processes.

Hinton has publicly floated odds in the “double‑digit percentage” range that things go catastrophically wrong, and he openly admits to feeling real regret about some of his contributions. He is not saying “stop AI”; he is saying, very bluntly, “we are not in control, and we absolutely cannot afford to be wrong.”

YOSHUA BENGIO - REGULATE OR BE REGULATED BY AI

If Hinton is the fire alarm, Bengio is the emergency governance architect sketching evacuation plans in real time. He has become one of the most persistent voices arguing that market forces alone will push AI in exactly the wrong direction: opaque, unaccountable, and optimized for profit over safety and democracy.

Bengio’s 5 to 10‑year outlook is uncomfortable but precise.

Regulation is inevitable: He argues that without stringent safety assessments, independent audits and legal liability for AI developers, the systems will be deployed faster than society can absorb the shocks.

Transparency becomes existential: Bengio distinguishes between transparency about how models are developed and deployed, and transparency about what is going on inside them, warning that once systems become more capable, the second problem could become deadly if misaligned goals are hidden behind impressive performance.

Governance must be international: He has called for treaties because advanced models will be built in only a few countries, and any one of them can drag the rest of the world into a race they did not choose.

Bengio also raises a political red flag. He points directly at massive lobbying budgets and “super PAC” style funding being used to fight meaningful regulation, arguing that a handful of players are trying to lock in their advantage before real guardrails appear. He is not anti‑innovation; he is anti‑naïveté about power.

YANN LECUN - THE UNAPOLOGETIC ANTI-DOOMER

LeCun plays an entirely different game. He thinks the apocalyptic framing is not just wrong, but actively harmful because it distracts from the real engineering work needed to make AI genuinely useful and robust. He routinely points out that the large language models grabbing headlines today are pattern‑completion engines, not minds, and that projecting god‑like agency onto them is a category error.

Over the next 5 to 10 years, LeCun expects a decisive architectural pivot.

Beyond LLMs: He argues today’s systems are brittle autocomplete machines trained on text, whereas intelligence requires grounded “world models” that understand physical reality, cause and effect, and long‑term consequences.

JEPA and world models: His proposed Joint Embedding Predictive Architecture aims to build systems that learn by predicting and reasoning about high‑dimensional sensory input, closing the gap between perception, memory and planning.

Fear is the wrong default: LeCun insists that “AGI doom” scenarios vastly overestimate current capabilities and underestimate human governance capacity, and he frames AI as another general‑purpose technology that will, on balance, expand wealth and empower people if developed sensibly.

To LeCun, the real danger in the next decade is not Skynet waking up. It is regulators freezing the field around today’s flawed architectures and locking out the more powerful approaches that could actually make AI safer and more useful.

LET’S FOCUS ON WHERE THEY FUNDAMENTALLY DISAGREE

Here is the core fault line: they are looking at the same trajectory and optimizing for different failure modes.

Hinton’s question: “What if this really can outsmart us, and we wait until it is too late to notice?”

Bengio’s question: “What happens when a few players own and deploy this power with almost no democratic control?”

LeCun’s question: “Why are we catastrophizing about systems that don’t even understand the world yet, instead of building better ones?”

OVER THE NEXT 5 TO 10 YEARS

Those three lenses lead to very different bets and very different outcomes.

If Hinton is right, the window for serious safety research, capability throttling and global coordination is closing much faster than most policymakers realize.

If Bengio is right, unregulated commercial deployment will quietly hard‑wire AI‑mediated inequality, manipulation and surveillance into the infrastructure of everyday life.

If LeCun is right, the loudest fears will look like Y2K on steroids, while the real story is a second wave of AI systems that finally combine perception, reasoning and control to transform science, logistics, medicine and more.

IN OTHER WORDS

The godfather of regret, the godfather of governance, and the godfather of rationalist optimism are pulling the public conversation in three completely different directions. And that tension is exactly why this next decade of AI will be the most politically explosive, economically brutal and intellectually contested technology era any of us have ever lived through.

WHAT DO YOU THINK?