
OpenAI has published a paper on hallucinations titled “Why Language Models Hallucinate.” It’s a great study on why this failure mode happens and what researchers can do to fix it.

They start by making this fundamental claim: “language models hallucinate because standard training and evaluation procedures reward guessing over acknowledging uncertainty.” The idea is not new; what’s novel is their claim that you can fix hallucinations by changing how evaluation is done.

One thing I like about the paper is that the authors insist on demystifying hallucinations: They’re not unsolvable, and they’re not desirable. They just had to find the source and take the time and care to address it.

What they find is that the training objectives (during pre-training and post-training) push models away from expressing uncertainty or abstaining from answering questions: when you are rewarded only for accurate answers, a guess with any chance of being right has a higher expected score than abstaining, so saying “I don’t know” is always penalized.
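To make the incentive concrete, here is a minimal sketch of accuracy-only grading. The function name and the example probabilities are my own illustration, not something taken from the paper; the only assumption is that a correct answer scores 1 and everything else, including “I don’t know,” scores 0.

```python
def expected_score_binary(p_correct: float, abstain: bool) -> float:
    """Expected score under accuracy-only grading (hypothetical helper).

    Assumed scheme: 1 point for a correct answer, 0 for a wrong answer,
    and 0 for abstaining.
    """
    if abstain:
        return 0.0
    # Correct with probability p_correct (worth 1), wrong otherwise (worth 0).
    return p_correct * 1.0 + (1.0 - p_correct) * 0.0


if __name__ == "__main__":
    # Illustrative confidence levels, from a near-blind guess to a safe bet.
    for p in (0.01, 0.25, 0.90):
        guess = expected_score_binary(p, abstain=False)
        idk = expected_score_binary(p, abstain=True)
        print(f"p_correct={p:.2f}  guess={guess:.2f}  abstain={idk:.2f}")
```

Even at a 1% chance of being right, the guess comes out ahead of abstaining, which is the whole problem: under this scheme there is no confidence level at which “I don’t know” is the better move.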

The authors propose introducing penalties for incorrect answers. Again, this approach is not new, but they argue that creating new hallucination-specific benchmarks, or simply reducing the relevance of standard ones, is not the best choice: instead, the entire community should embrace adding penalties to the existing benchmarks that everyone uses.

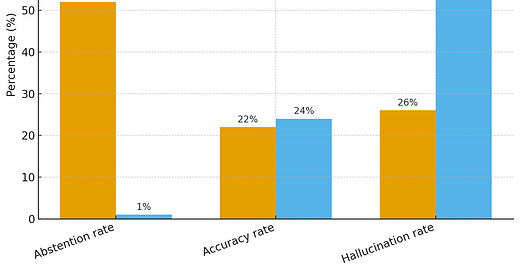

If you indirectly reward AI models for abstaining when they’re unsure of an answer, the rate of hallucinations should drop drastically.
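A rough sketch of how a penalty changes the calculation, under assumptions of my own: a correct answer earns 1 point, a wrong answer loses `penalty` points, and abstaining earns 0. The helper names are hypothetical, and the specific penalty `t / (1 - t)` is an illustrative choice, picked because it makes the break-even confidence exactly `t`.

```python
def expected_score_penalized(p_correct: float, penalty: float) -> float:
    """Expected score of answering when wrong answers cost `penalty` points.

    Assumed scheme: +1 for correct, -penalty for wrong, 0 for abstaining.
    """
    return p_correct * 1.0 - (1.0 - p_correct) * penalty


def should_answer(p_correct: float, penalty: float) -> bool:
    """Answer only if doing so beats the 0 points earned by abstaining."""
    return expected_score_penalized(p_correct, penalty) > 0.0


def penalty_for_threshold(t: float) -> float:
    """Penalty that puts the break-even confidence at t (requires 0 <= t < 1).

    Setting p - (1 - p) * penalty = 0 gives p = penalty / (1 + penalty),
    which equals t when penalty = t / (1 - t).
    """
    if not 0.0 <= t < 1.0:
        raise ValueError("t must satisfy 0 <= t < 1")
    return t / (1.0 - t)


if __name__ == "__main__":
    penalty = penalty_for_threshold(0.75)  # illustrative threshold
    for p in (0.50, 0.90):
        score = expected_score_penalized(p, penalty)
        print(f"p_correct={p:.2f}  answer_score={score:+.2f}  "
              f"answer={should_answer(p, penalty)}")
```

With no penalty, this reduces to accuracy-only grading, where answering is never worse than abstaining. Once the penalty is positive, a low-confidence guess has a negative expected score and “I don’t know” becomes the rational choice.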

But is that the whole story? I argue it isn’t: out-of-distribution (OOD) cases, which occur because the distribution of the training dataset is different from the distribution over the entire range of cases one can encounter in the wild, can’t be solved by this approach. Navigating reality is, by definition, an OOD problem that requires capabilities LMs lack, like intuition, fluid intelligence, improvisation, and generalization.

It doesn’t matter how well AI learns to say “I don’t know”; it won’t say “I don’t know” when it doesn’t know that it doesn’t know.