
One of my earliest memories: 1987, age six, asking my father what nuclear weapons were after hearing about Pershing missiles being withdrawn from Germany. He explained, then assured me the Soviets had "no money to start a nuclear war."

Today, China is adding 100+ nuclear warheads annually. Russia deploys hypersonic glide vehicles. The US races to modernize its arsenal. The Cold War frameworks that seemed to solve nuclear paradoxes have collapsed.

In 1983, philosopher Gregory Kavka posed a thought experiment: A billionaire offers you $1 million if you intend (at midnight tonight) to drink a mildly harmful toxin tomorrow at noon. You get paid based solely on your intention - you don't actually have to drink it. But can you genuinely intend to drink something when you know that tomorrow, with the money already in hand, you'll have no reason to follow through?

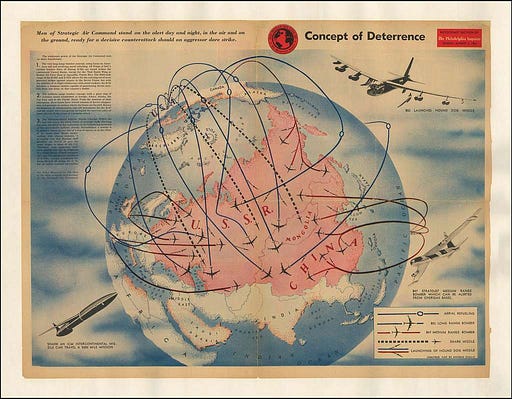

Kavka developed this puzzle while studying deterrence theory. The logic is the same: How can you credibly threaten to retaliate with nuclear weapons after your country has already been destroyed, when retaliation at that point would serve no purpose? You can't genuinely intend to do something you know will be irrational when the time comes.

The Cold War "solved" this through technology - automated systems, launch-on-warning, removing human hesitation. Dr. Strangelove's Doomsday Machine was satire, but it solved the commitment problem by eliminating human choice.
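To make the structure of the puzzle concrete, here is a minimal sketch of it as a two-stage decision, reasoning backward from noon. Everything in it is an illustrative assumption rather than Kavka's own formalism: the `ToxinPuzzle` class, the dollar figure for `toxin_cost`, and the simplifying rule that an intention only counts as genuine if you expect to follow through.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToxinPuzzle:
    reward: float = 1_000_000.0  # paid at midnight if the intention is genuine
    toxin_cost: float = 1_000.0  # hypothetical dollar-equivalent of a day of illness


def noon_choice(puzzle: ToxinPuzzle) -> str:
    """What a free agent does at noon, when the payment is already settled."""
    # The reward is the same whether or not you drink, so only the cost matters.
    utility_drink = -puzzle.toxin_cost
    utility_abstain = 0.0
    return "drink" if utility_drink > utility_abstain else "abstain"


def can_intend(puzzle: ToxinPuzzle, binding_commitment: bool) -> bool:
    """Assumption: an intention is genuine only if you expect to follow through."""
    if binding_commitment:
        return True  # the choice at noon has been removed, so follow-through is certain
    return noon_choice(puzzle) == "drink"


def payoff(puzzle: ToxinPuzzle, binding_commitment: bool) -> float:
    """Total outcome for an agent with or without a way to bind its future self."""
    if not can_intend(puzzle, binding_commitment):
        return 0.0  # no genuine intention, no payment, no toxin
    drinks = binding_commitment or noon_choice(puzzle) == "drink"
    return puzzle.reward - (puzzle.toxin_cost if drinks else 0.0)


if __name__ == "__main__":
    puzzle = ToxinPuzzle()
    print("free agent:     ", payoff(puzzle, binding_commitment=False))
    print("committed agent:", payoff(puzzle, binding_commitment=True))
```

Under these assumptions the agent that keeps its freedom to choose at noon walks away with nothing, while the agent that removes its own choice collects the reward minus the cost of the toxin. That is the same trade the Doomsday Machine makes.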

Today's AI arms race risks making this literal. When decision timelines compress to seconds, human judgment becomes a "bottleneck." The solution to rational decision-making paradoxes becomes eliminating rational decision-making entirely.

But could AI also help us understand these paradoxes? I'm exploring how reinforcement learning algorithms handle the same commitment problems that stumped Cold War strategists.

Image source: cdn2.picryl.com/photo/1…