
I have recently had a series of posts featuring guest authors: “The Human Interfaces of Data”. In Abhi Sivasailam’s post in this series, he describes a Data Design Document (DDD), and shares a template:

For me, the data life-cycle begins with the Product Requirements Document (PRD), which is owned by the relevant Product Manager and details feature development needs. Each PRD must include an Analytics Requirements Document (ARD) that is co-created with the relevant Product Analyst(s) and details:

Key operating metrics and KPIs that must be trackable as part of a functional release; and

Exploratory questions that the PM/Analyst anticipate asking themselves, or that their partners are asking

Product Requirements give rise to Engineering Requirements, but, importantly, Engineering Requirements contain data requirements expressed in a Data Design Document. Features can’t be considered complete without a DDD!

With respect to data, every new feature a product team creates introduces some combination of the following side-effects:

Creates or necessitates the collection of new data

Mutates or alters old data or prior representations of data

Raises new questions of existing data

The Data Design Document is intended to thoughtfully manage these effects, up front, by defining the necessary data collection and modeling requirements.

The ARD defines what needs to be measured to track the goals of the PRD. Let’s go through an example: imagine that we’re working at an e-commerce company.

One of the overarching goals of an e-commerce company could be to increase revenue. One metric that contributes to revenue might be the conversion from product feed pages to products added to basket. Normally, a customer would click on a product in the feed, look at it in more depth, and then add it to their basket if they want to buy it. A product manager could hypothesise that adding product hover overlays will help customers convert more readily from product feed pages to products added to basket.

There are a few things you could look to measure here:

How often did visitors add to basket directly from the product hover overlay?

How often did visitors go from product overlay to the product page?

How does conversion compare to when the product hover overlay isn’t deployed (control group)?

Do visitors who see the product overlays when browsing the feed, but don’t click on them, still convert better than the control?

The questions above are by no means comprehensive - just some initial ideas - but a data model naturally emerges from them:

We already have an event for a feed being displayed. This now needs a feature flag (or equivalent) to show whether the feed had the new product hover overlays enabled. Sometimes teams do things like splitting visitor groups by the first digit of the visitor_id. This is inferior, as it’s not explicit and relies on the visitor_id persisting, via cookies, for the whole of a visitor’s session.
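To make the contrast concrete, here is a minimal Python sketch. The event and field names (`FeedDisplayed`, `hover_overlay_enabled`) and the "first digit 0 to 4 means treatment" split rule are hypothetical, chosen only for illustration. The explicit flag records what the visitor actually saw; the implicit rule only works if every consumer knows the rule and the cookie-based visitor_id survives the session.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedDisplayed:
    """Hypothetical 'feed displayed' event with an explicit feature flag."""
    event_id: str
    visitor_id: str
    feed_id: str
    # New field: did this feed render with the product hover overlays?
    hover_overlay_enabled: bool


def variant_from_flag(event: FeedDisplayed) -> str:
    # Explicit: the event itself records which experience was shown.
    return "treatment" if event.hover_overlay_enabled else "control"


def variant_from_visitor_id(visitor_id: str) -> str:
    # Implicit (the weaker approach): infer the group from the first digit of
    # visitor_id. This split rule is an assumption; anyone querying the data
    # has to know it, and it breaks if the visitor_id (cookie) changes.
    return "treatment" if visitor_id[0] in "01234" else "control"


feed = FeedDisplayed("e1", "7319", "feed-42", hover_overlay_enabled=True)
print(variant_from_flag(feed))                   # treatment
print(variant_from_visitor_id(feed.visitor_id))  # control: the guess disagrees
```

If the split rule the analyst assumes differs from the one engineering actually shipped, the two answers disagree silently, which is exactly the risk of the implicit approach.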

We need a new event for the product hover overlay: it should contain all the details that the visitor saw in the overlay. This could include stock status, sizes available, colours available… It also needs to have a product_id, to link it back to the product on the feed. It would also need the feed_id to link back to the feed. A less clear choice is whether to also store the position of the product on the feed.
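A sketch of what such an overlay event might look like, assuming Python dataclasses as a stand-in for whatever schema language the team actually uses. All names here (`ProductHoverOverlayDisplayed`, `stock_status`, `feed_position` and so on) are hypothetical. The optional `feed_position` reflects the open question about storing the product's position.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class ProductHoverOverlayDisplayed:
    """Hypothetical event: what the visitor saw in a product hover overlay."""
    event_id: str
    visitor_id: str
    feed_id: str       # links back to the feed the overlay appeared on
    product_id: str    # links back to the product on that feed
    stock_status: str  # e.g. "in_stock", "low_stock", "out_of_stock"
    sizes_available: tuple[str, ...] = ()
    colours_available: tuple[str, ...] = ()
    # The less clear choice: the product's position on the feed. Optional
    # here so it can be omitted if the team decides not to store it.
    feed_position: Optional[int] = None


overlay = ProductHoverOverlayDisplayed(
    event_id="e2",
    visitor_id="v1",
    feed_id="feed-42",
    product_id="p-123",
    stock_status="in_stock",
    sizes_available=("S", "M"),
    colours_available=("red",),
)
# Serialise to JSON, roughly as it might be sent to a collector.
print(json.dumps(asdict(overlay)))
```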

We also need a new event to record interactions with the overlay, whether that is configuring an item (e.g. choosing size/colour), adding the item to basket or clicking on it to go to the product page.
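One way to model this is a single interaction event with an enumerated action type, sketched below in Python. The enum values, field names and the validation rule (a configuration interaction must name a size or colour) are assumptions for illustration, not a prescribed design.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class OverlayAction(Enum):
    CONFIGURE_ITEM = "configure_item"        # e.g. choosing size/colour
    ADD_TO_BASKET = "add_to_basket"
    OPEN_PRODUCT_PAGE = "open_product_page"  # click through to the product page


@dataclass(frozen=True)
class ProductHoverOverlayInteraction:
    """Hypothetical event recording one interaction with an overlay."""
    event_id: str
    overlay_event_id: str  # event_id of the overlay display interacted with
    product_id: str
    action: OverlayAction
    size: Optional[str] = None
    colour: Optional[str] = None

    def __post_init__(self) -> None:
        # A configuration interaction should say what was configured.
        if self.action is OverlayAction.CONFIGURE_ITEM and not (self.size or self.colour):
            raise ValueError("CONFIGURE_ITEM needs a size and/or colour")


picked = ProductHoverOverlayInteraction(
    "i1", "e2", "p-123", OverlayAction.CONFIGURE_ITEM, size="M"
)
added = ProductHoverOverlayInteraction("i2", "e2", "p-123", OverlayAction.ADD_TO_BASKET)
print(picked.action.value, added.action.value)  # configure_item add_to_basket
```

A single event with an action field keeps the overlay funnel in one table; separate events per action would be an equally valid choice to agree in the DDD.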

Item added to basket events and product page views need a way to link back to the product hover overlay. At the very least, this could be the overlay’s event_id, perhaps alongside a referrer-type field so that analysts don’t need to join across all possible referrer events.
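The sketch below shows the idea for basket events; product page views could carry the same two fields. `referrer_event_id`, `referrer_type` and the `"product_hover_overlay"` value are hypothetical names. Because `referrer_type` says which kind of event the id points to, attributing basket adds to overlays needs only one lookup rather than a join against every possible referrer.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ItemAddedToBasket:
    """Hypothetical basket event with a link back to what referred it."""
    event_id: str
    visitor_id: str
    product_id: str
    # event_id of the referring event, e.g. the product hover overlay.
    referrer_event_id: Optional[str] = None
    # Names the kind of referrer, so analysts can join straight to the right
    # table instead of joining across every possible referrer event.
    referrer_type: Optional[str] = None


def adds_by_referrer_type(adds: list[ItemAddedToBasket]) -> Counter:
    """How many basket adds came from each kind of referrer."""
    return Counter(a.referrer_type or "unknown" for a in adds)


def adds_from_overlays(
    adds: list[ItemAddedToBasket], overlay_event_ids: set[str]
) -> list[ItemAddedToBasket]:
    """Basket adds that point at a known overlay event (a single-table join)."""
    return [
        a
        for a in adds
        if a.referrer_type == "product_hover_overlay"
        and a.referrer_event_id in overlay_event_ids
    ]


adds = [
    ItemAddedToBasket("b1", "v1", "p-123", "e2", "product_hover_overlay"),
    ItemAddedToBasket("b2", "v2", "p-456", "pv9", "product_page"),
    ItemAddedToBasket("b3", "v3", "p-789"),
]
print(adds_by_referrer_type(adds))
print([a.event_id for a in adds_from_overlays(adds, {"e2"})])  # ['b1']
```

This directly supports the first question above: how often visitors added to basket straight from the overlay.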

As you can see, even for a modest product change like this one, thinking through changes and additions to the data model is not trivial. By being part of this discussion, we shape the data model and the data collected to be as good as we can design with the time and resources available. It won’t be perfect, but it will be purposeful and well understood, and it will be aligned with the goals of our company by default.

Working in this way also makes it easy to agree a data contract, and perhaps even that is too heavy a term here. We’ve already agreed the data model (its events, entities, fields and types) with the data producers: this is the data contract. We haven’t sat across the table from them, asking for things they don’t want to give after they’ve already decided what to change to meet the PRD; we’ve helped them design the data model and the events collected.
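In its simplest form, the agreed model can be written down as field names and types and checked against incoming events. The Python sketch below is a deliberately minimal, hypothetical stand-in: real teams would more likely express this in JSON Schema, Protobuf or a contract tool, and `OVERLAY_CONTRACT` and `contract_violations` are illustrative names only.

```python
from typing import Any

# Hypothetical contract for the overlay event: field name -> expected type.
OVERLAY_CONTRACT: dict[str, type] = {
    "event_id": str,
    "visitor_id": str,
    "feed_id": str,
    "product_id": str,
    "stock_status": str,
}


def contract_violations(payload: dict[str, Any], contract: dict[str, type]) -> list[str]:
    """List every way the payload breaks the agreed fields and types."""
    problems = []
    for name, expected in contract.items():
        if name not in payload:
            problems.append(f"missing field: {name}")
        elif not isinstance(payload[name], expected):
            problems.append(
                f"{name}: expected {expected.__name__}, got {type(payload[name]).__name__}"
            )
    return problems


good = {"event_id": "e2", "visitor_id": "v1", "feed_id": "feed-42",
        "product_id": "p-123", "stock_status": "in_stock"}
bad = {"event_id": "e3", "visitor_id": "v1", "product_id": 123,
       "stock_status": "in_stock"}
print(contract_violations(good, OVERLAY_CONTRACT))  # []
print(contract_violations(bad, OVERLAY_CONTRACT))
# ['missing field: feed_id', 'product_id: expected str, got int']
```

The point is that nothing in the contract is new: it is the data model the producers already agreed to, written down.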

Data contracts, whilst a good idea, are very defensive on their own: they are reactive. Imagine being given the questions to be answered after the PRD and engineering changes were already defined. You would then have to look at the new and changed data from the engineering changes and work backwards to determine whether they will enable the questions to be answered. Then you would define the data contract to cover the new and changed data, and ask for extra requirements to be added to the schema to help answer the questions. You’re asking the engineers to do more work than the PRD requires, which is a losing battle.

Clarity about why some questions can’t be answered is also lost, and without it the data team can appear obstructive. It’s very easy for it to become ‘them and us’.

The DDD, together with the human process of being part of the wave rather than having to surf it (or being washed over by it), is superior. It also makes it much more likely that the data team will be considered part of the profit centre making the change, rather than a reactive cost centre handling tickets. The cost of the data work becomes part of the investment, upon which there is a return.

The technical work is similar between the two, but this way is more efficient, rewarding and likely to have good results. Less ‘them and us’ and finger-pointing about who broke the data, more a collective decision to make compromises to the data model, based on ROI.

Going with the tide, rather than against, makes a huge difference to efficacy. Everything is discussed at the right time, not rehashed afterwards when some key people delivering the PRD may not be there. Agreement of the data model is collaborative. Where you have shared context between data, product and engineering, chances are that what is delivered will be of a higher standard. It will probably result in a more logical and feature-rich data model, capable of answering more questions, too.

Harmful data contract violations become less likely, too. The engineers’ intentions are aligned with the data team’s, so even if they implement something differently from the spec, data loss is less likely.

As the questions and associated measures are considered alongside the data model, the changes or additions needed in a semantic layer become very clear. They can be defined up front, rather than reverse engineered by the data team afterwards. This is cheaper, faster and more likely to be correct.
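As an illustration of defining a measure up front, here is a hypothetical metric definition plus a tiny evaluator in plain Python. It is not the syntax of any real semantic layer; `Metric`, `FEED_TO_BASKET` and the event names are assumptions. It computes visitor-level conversion from feed views to basket adds, split by the overlay flag, which covers the control-group comparison from the question list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """A hypothetical, minimal metric definition agreed alongside the DDD."""
    name: str
    numerator_event: str
    denominator_event: str
    group_by: str  # field on the denominator event to split by


FEED_TO_BASKET = Metric(
    name="feed_to_basket_conversion",
    numerator_event="item_added_to_basket",
    denominator_event="feed_displayed",
    group_by="hover_overlay_enabled",
)


def conversion_by_group(events: list[dict], metric: Metric) -> dict:
    """Share of visitors with a denominator event who also fired the numerator
    event, per group. Visitor-level and unordered: a simplification, and a
    visitor seen in both groups is counted in both."""
    seen: dict = {}    # group value -> visitor_ids with the denominator event
    converted = set()  # visitor_ids with the numerator event
    for e in events:
        if e["event"] == metric.denominator_event:
            seen.setdefault(e[metric.group_by], set()).add(e["visitor_id"])
        elif e["event"] == metric.numerator_event:
            converted.add(e["visitor_id"])
    return {group: len(vs & converted) / len(vs) for group, vs in seen.items()}


events = [
    {"event": "feed_displayed", "visitor_id": "v1", "hover_overlay_enabled": True},
    {"event": "feed_displayed", "visitor_id": "v2", "hover_overlay_enabled": True},
    {"event": "feed_displayed", "visitor_id": "v3", "hover_overlay_enabled": False},
    {"event": "feed_displayed", "visitor_id": "v4", "hover_overlay_enabled": False},
    {"event": "item_added_to_basket", "visitor_id": "v1"},
]
print(conversion_by_group(events, FEED_TO_BASKET))  # {True: 0.5, False: 0.0}
```

Because the grouping field and event names come straight from the agreed data model, the metric can be written before the feature ships rather than reverse engineered afterwards.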

Context is not inherently shared through a data contract. I defined quite an extended spec for a data contract here, one that would share a lot of context, but that isn’t the norm. From the contract alone, engineers can’t tell how consumers might use the data, or what questions need to be answered from it. When APIs are delivered as products, engineers understand what at least the initial consumers of the API are going to use it for. With data contracts, the data consumers aren’t the primary consumers of the delivered product change, so engineers don’t necessarily know how the data will be consumed. Yes, we might think about data contracts as “just another API”, but this time the API is a by-product rather than the product.

Data contracts don’t share context with the data team about the data model, the changes made to it or the reasons for them. As I’ve mentioned above, the data team ends up having to reverse engineer lots of things because they lack that context. Context isn’t essential for a data contract to work, but it massively improves efficiency, efficacy and collaboration, while reducing risk.

When interviewing Abhi in the post above, I asked at least twice, in different ways, how we get this way of working into action. At the time, I thought it would be difficult to get data folks in the room. Having weighed the benefits against doing data contracts alone, I now think this way of working will be easier to sell than I anticipated.

Trying to enforce data contracts without a DDD, and without being “part of the wave”, adds work for a stretched engineering team. This will most likely fail, and will also leave the data team reactive and seen as obstructive. Being part of the team delivering the change adds value within the product development workflow, and it’s not clear that it puts any additional work on the engineering team. The extra context engineers gain at the planning stage may even reduce their work, or make it easier to know what to do. So, the business case for working in this way is clear:

probably greater efficiency with engineering

certainly greater efficiency with data headcount

greater speed of delivery for data requirements

data headcount spend is more attributable to profit centre

probably greater speed of delivery for product changes in general, as there is less back and forth

higher likelihood of being able to answer questions and measure KPIs

higher likelihood of data model being adaptable for further product changes

lower likelihood of data outage

no obvious additional cost from operating this way

If I were selling this to a CFO and went down the list, they’d say: “You had me at greater efficiency with data headcount, but I’ll take the rest too.”

I was wrong: this shouldn’t be a hard sell at all. Some of you will say it’s obvious we should work this way, and that doing data contracts without being part of the product development process is the tail wagging the dog. However, in speaking to a number of practitioners who, at least on a subconscious level, know this to be true, I’ve found that they still treat applying data contracts as the goal. Being part of the process that delivers company goals is the way, and data contracts are a natural output of it.

Some data teams are under stress from constant product changes that cause them downstream issues. It may be natural to take a defensive position, but let's join the offense instead.

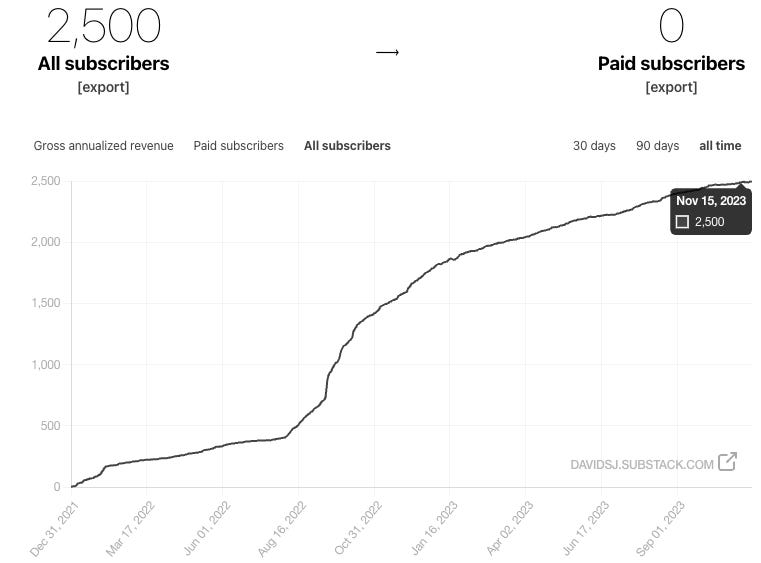

Today marks my 100th post in this Substack. I have not missed a week in the last 100. I’ve posted from various locations, on holiday, at conferences and even from two different hospitals! This might sound crazy, but I’ve enjoyed it.

Whilst everything else around me has changed hugely and a number of times, delivering something in this blog every Wednesday has been a constant. There was no war in Ukraine or Israel when I started this blog. I was a data practitioner, looking to move into another similar role, when I started this blog. I wasn’t confident enough to be a founder when I started this blog. I wasn’t confident enough to speak to a room of hundreds of people when I started this blog. I didn’t have the wonderful global network of friends and collaborators that I have now, when I started this blog.

Thanks for sticking with me for so long!

I’ve loosely tried to deliver around 1500 words every week (I say ‘loosely’ because there have been times when I’ve massively exceeded this, especially when I’ve interviewed someone). I may experiment with format and cadence going forward - maybe shorter and more often, maybe longer and less often, maybe audio/video… I haven’t decided and I may not even change it any time soon. I’m open to ideas, let me know what you’d like to see or find helpful.
