
Today's Open Source (2024-09-13): XVERSE-MoE-A36B, China's Largest Open-Source MoE Model

Discover top AI open-source projects like XVERSE-MoE-A36B, OpenAI-o1, Agent Workflow Memory, and more. Explore advanced language models and tools.

Here are some interesting AI open-source models and frameworks I wanted to share today:

Project: XVERSE-MoE-A36B

XVERSE-MoE-A36B is a multilingual large language model developed by Shenzhen Xverse Technology. It is built on the Mixture of Experts (MoE) architecture.

Of its 255.4 billion total parameters, only 36 billion are activated at inference time.
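To make the gap between total and activated parameters concrete, here is a minimal sketch of top-k expert routing in plain Python. Only the two parameter counts come from the text above. The number of experts, the value of `k`, and the gate scores are invented for illustration and are not XVERSE's actual configuration.

```python
import math

TOTAL_PARAMS_B = 255.4   # total parameters, in billions (from the text)
ACTIVE_PARAMS_B = 36.0   # activated parameters, in billions (from the text)


def active_fraction(active: float, total: float) -> float:
    """Share of the model's parameters that actually run."""
    return active / total


def top_k_route(gate_scores: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and softmax-normalize their weights.

    Returns a mapping of expert index -> mixing weight. Experts that are not
    selected do no work for this token, which is why only part of an MoE
    model is active at a time.
    """
    ranked = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:k]
    peak = max(gate_scores[i] for i in chosen)  # subtract the max for numerical stability
    exps = {i: math.exp(gate_scores[i] - peak) for i in chosen}
    norm = sum(exps.values())
    return {i: e / norm for i, e in exps.items()}


if __name__ == "__main__":
    print(f"active share: {active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B):.1%}")
    # Hypothetical gate scores for 8 experts, routing each token to the top 2.
    print(top_k_route([0.1, 2.0, -1.0, 0.5, 2.0, 0.0, 1.5, -0.3], k=2))
```

With the figures quoted above, roughly one parameter in seven is active, which is the main reason an MoE model of this size can be cheaper to run than a dense model with the same total parameter count.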

It uses a 4D topology to balance communication, memory, and computing resources.

Trained on vast amounts of high-quality data, it supports over 40 languages, excelling particularly in Chinese and English.

https://huggingface.co/xverse/XVERSE-MoE-A36B

https://github.com/xverse-ai/XVERSE-MoE-A36B

Project: OpenAI-o1

The OpenAI o1 series are next-gen language models, trained with reinforcement learning to handle complex reasoning tasks.

Before responding, o1 models think through the problem, generating a detailed internal thought process.

They excel at reasoning-heavy tasks: o1 ranks in the 89th percentile on Codeforces competitive programming problems, places among the top 500 US students in a qualifier for the USA Math Olympiad (AIME), and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA).

https://platform.openai.com/docs/guides/reasoning

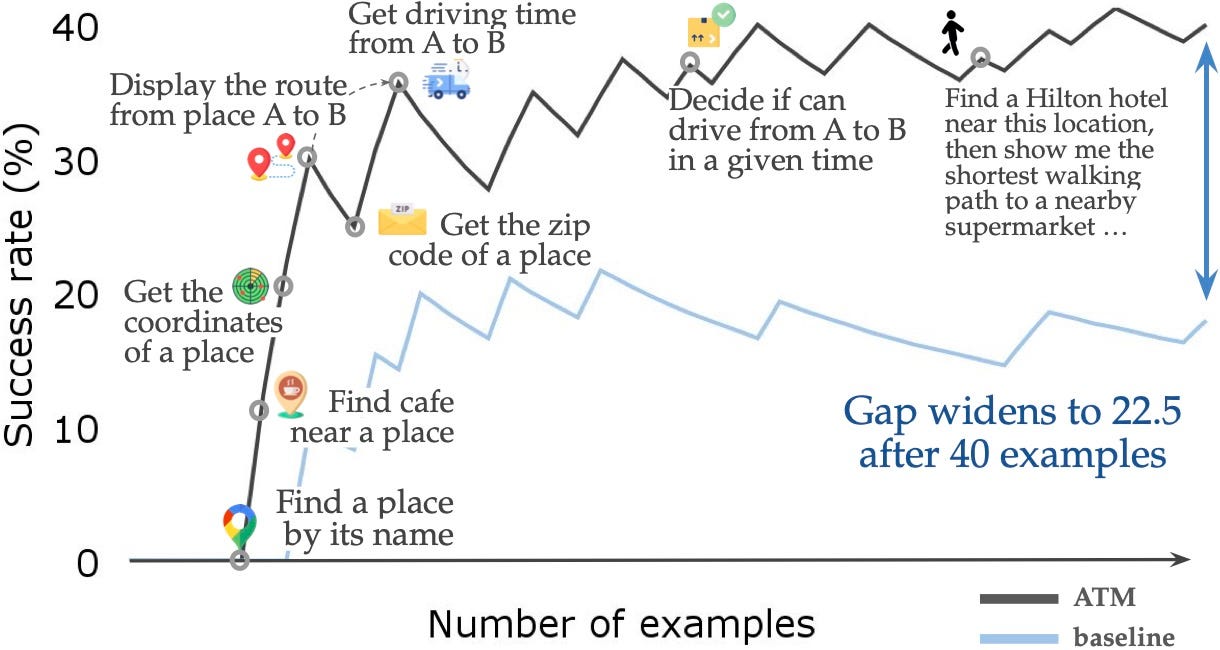

Project: Agent Workflow Memory

Agent Workflow Memory (AWM) introduces a way to integrate and utilize workflows in agent memory.

Workflows are reusable task-solving subroutines. AWM operates in two modes: offline, where agents learn workflows from labeled examples ahead of time, and online, where agents learn them from their own past experiences in real time.
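The two modes can be sketched roughly as follows. This is a toy illustration of the idea, not code from the AWM repository: `Workflow`, `WorkflowMemory`, `induce`, `learn_offline`, and `learn_online` are all hypothetical names, and the induction step simply stores the trajectory, where a real system would abstract it into a reusable routine.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Workflow:
    goal: str                # short description of the sub-task
    steps: tuple[str, ...]   # ordered actions that accomplish it


@dataclass
class WorkflowMemory:
    workflows: list[Workflow] = field(default_factory=list)

    def add(self, wf: Workflow) -> None:
        if wf not in self.workflows:  # skip exact duplicates
            self.workflows.append(wf)

    def as_prompt(self) -> str:
        """Render stored workflows as text an agent could read before acting."""
        return "\n".join(f"{w.goal}: {' -> '.join(w.steps)}" for w in self.workflows)


def induce(goal: str, trajectory: list[str]) -> Workflow:
    """Placeholder induction: keeps the trajectory unchanged (an assumption)."""
    return Workflow(goal, tuple(trajectory))


def learn_offline(memory: WorkflowMemory, examples: list[tuple[str, list[str]]]) -> None:
    """Offline mode: build memory from labeled examples before any task is run."""
    for goal, trajectory in examples:
        memory.add(induce(goal, trajectory))


def learn_online(
    memory: WorkflowMemory,
    tasks: list[str],
    solve: Callable[[str, str], tuple[bool, list[str]]],
) -> None:
    """Online mode: after each task, keep the trajectory only if it succeeded."""
    for task in tasks:
        succeeded, trajectory = solve(task, memory.as_prompt())
        if succeeded:
            memory.add(induce(task, trajectory))
```

Here `solve` stands in for the agent itself: it receives the task plus the rendered memory and returns a success flag and the actions it took.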

https://github.com/zorazrw/agent-workflow-memory

Project: Ell

Ell is a lightweight functional prompt engineering framework that treats prompts as programs, not just strings.

It offers tools for version control, monitoring, and visualizing prompts. It supports processing and generating multimodal data like text, images, audio, and video.
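As a rough illustration of what "prompts as programs" can mean, the sketch below wraps an ordinary Python function so that its docstring becomes the system message, its return value becomes the user message, and a short version id is derived from the function itself. The `prompt_program` decorator is hypothetical and is not Ell's API; see the repository below for the real interface.

```python
import hashlib
from typing import Callable


def prompt_program(fn: Callable[..., str]):
    """Hypothetical decorator (not Ell's API) that turns a function into a prompt.

    The docstring is used as the system message, the return value as the user
    message, and a short hash of the function acts as a version id that
    changes whenever the prompt logic or wording changes.
    """
    code = fn.__code__
    string_consts = "".join(c for c in code.co_consts if isinstance(c, str))
    fingerprint = (fn.__doc__ or "").encode() + code.co_code + string_consts.encode()
    version = hashlib.sha256(fingerprint).hexdigest()[:8]

    def build(*args, **kwargs) -> dict:
        return {
            "version": version,
            "messages": [
                {"role": "system", "content": (fn.__doc__ or "").strip()},
                {"role": "user", "content": fn(*args, **kwargs)},
            ],
        }

    build.version = version
    return build


@prompt_program
def greet(name: str) -> str:
    """You are a friendly assistant."""
    return f"Say hello to {name}."


if __name__ == "__main__":
    # The resulting dict could be logged, diffed between versions, or sent to a model.
    print(greet("Ada"))
```

Because the prompt is a function, it can be tested, composed with other functions, and tracked across edits like any other code.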

https://github.com/MadcowD/ell

Project: DataGemma

Google's DataGemma is a set of fine-tuned Gemma 2 models that help large language models access reliable public statistics from Data Commons.

DataGemma RAG uses Retrieval-Augmented Generation, while DataGemma RIG uses Retrieval-Interleaved Generation to help models understand and answer natural language queries.
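The difference between the two approaches is mostly about when retrieval happens. The toy sketch below shows the ordering only. The `FAKE_STATS` table, the `[DC: ...]` marker syntax, and every function name are invented for illustration and do not reflect DataGemma's actual output format or the Data Commons API.

```python
import re

# Toy stand-in for Data Commons; the key and value are invented placeholders.
FAKE_STATS = {"population of Exampleland": "1,234,567"}

# Hypothetical inline marker a model might emit where it needs a statistic.
MARKER = re.compile(r"\[DC: ([^\]]+)\]")


def lookup(query: str) -> str:
    """Return the stored statistic for a query, or 'unknown' if there is none."""
    return FAKE_STATS.get(query, "unknown")


def rag_prompt(question: str, stat_queries: list[str]) -> str:
    """RAG-style: fetch statistics first, then pass them to the model as context."""
    context = "\n".join(f"{q}: {lookup(q)}" for q in stat_queries)
    return f"Context:\n{context}\n\nQuestion: {question}"


def rig_resolve(draft: str) -> str:
    """RIG-style: the draft contains inline lookup markers, each of which is
    replaced with a retrieved value as part of producing the final answer."""
    return MARKER.sub(lambda m: lookup(m.group(1)), draft)


if __name__ == "__main__":
    print(rag_prompt("How many people live in Exampleland?", ["population of Exampleland"]))
    print(rig_resolve("Exampleland has [DC: population of Exampleland] residents."))
```

In the RAG path the model sees the retrieved numbers before it writes anything, while in the RIG path retrieval is interleaved with the model's own draft.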

https://huggingface.co/google/datagemma-rig-27b-it

https://huggingface.co/google/datagemma-rag-27b-it

Project: Chronos-Divergence

Chronos-Divergence-33B is a unique model based on Chronos-33B, focused on role-playing and story writing prompts.

It was trained with a sequence length of 16,834 tokens and can handle about 12,000 tokens before output quality degrades, without relying on RoPE scaling or similar techniques. It avoids repetitive phrases, and its authors plan to add Grouped Query Attention (GQA) to reduce memory use.

https://huggingface.co/ZeusLabs/Chronos-Divergence-33B

Project: PresentationGen

PresentationGen is a SpringBoot web app that generates PowerPoint presentations using large language models.

Users can quickly create customized PPT files, with support for template selection and content replacement, making it suitable for various presentation needs.