
What 2020 taught us about polarization and the polls 📊 November 15, 2020

Campaign events don’t matter as much, but error from non-response could be higher

Thanks as ever for reading my weekly data-driven newsletter on politics, polling and the news. As always, I invite you to drop me a line (or just respond to this email). Please click/tap the ❤️ under the headline if you like what you’re reading; it’s our little trick to sway Substack’s curation algorithm in our favor. If you want more content, I publish subscribers-only posts 1-2x a week.

Dear reader,

I hope you have caught up a bit on your election-week sleep deficit and are looking forward to the Thanksgiving holiday, however isolated it may be in an era of covid-19. I had to cancel a trip home but am trying to make the most of it by focusing on writing my book. But by way of procrastinating…

I have had some time to reflect on our presidential election forecast since Biden was declared the winner last Saturday, and while I am mostly satisfied with how our model worked, I do have a few mea culpas. I want to highlight one of them today, on how political polarization changes the way we forecast elections. The bottom line is in the subtitle: events should have smaller effects on vote intentions over the campaign, but error from partisan non-response (that is, from who answers the polls) could be larger.

Be well,

—Elliott

What 2020 taught us about polarization and the polls

Campaign events don’t matter as much, but error from non-response could be higher

Recall that I spent a good portion of 2020 arguing (sometimes in a rather high-profile way) that political polarization had fundamentally changed the way we should forecast elections, in two ways. First, political science has shown that polarization clouds partisans’ interpretations of both economic growth and the incumbent’s successes/failures on the economy. A recession these days won’t have the same impact on the incumbent president’s vote share that it would have had in, say, the 1970s.

Second, I argued that polarization decreases variance in the polls over the election campaign. There is a strong empirical relationship between the share of swing voters in the electorate and the net change in the polls from 300 days before Election Day to Election Day itself. Now, with our historically low levels of swing-voting, candidates should end the campaign roughly where they began, with some room for error (turns out, about 10 points on two-party vote share).

I stand by both of these assessments. Partisanship still seems to be at an all-time high, as measured by low levels of self-reported swing voting in pre-election surveys, and Joe Biden’s support in the polls didn’t budge by more than a point or two between March and November.

But recall also that there are two types of polling error when forecasting an election. First, there is the error from the chance that things could change between the day on which you’re forecasting and Election Day. (We have already covered that error.) Then, there is the chance that polls are biased and underrate candidates’ numbers — systematically or otherwise — on Election Day. And while I thought hard about how polarization changes the landscape of uncertainty on the first type of error, I did not devote much time to how it could change systematic error on Election Day.
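To make the two error types concrete, here is a minimal sketch of how they might combine into one overall forecast uncertainty. It assumes the two sources are independent, so their variances add. The function name and every number are hypothetical and chosen only for illustration; this is not the forecast model discussed above.

```python
import math


def total_forecast_sd(drift_sd: float, election_day_sd: float) -> float:
    """Combine two error sources, assumed independent, by adding variances.

    drift_sd: uncertainty from opinion changing between today and Election Day.
    election_day_sd: uncertainty from polls being systematically off on the day.
    Both are in percentage points of two-party vote share.
    """
    return math.sqrt(drift_sd ** 2 + election_day_sd ** 2)


# Hypothetical inputs (illustrative only, not estimates from any model).
less_polarized = total_forecast_sd(drift_sd=4.0, election_day_sd=3.0)
more_polarized = total_forecast_sd(drift_sd=3.0, election_day_sd=4.0)

print(less_polarized, more_polarized)
```

With these made-up inputs the two totals come out the same: a smaller campaign-drift term can be offset entirely by a larger Election Day bias term, which is the trade-off described above.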

…

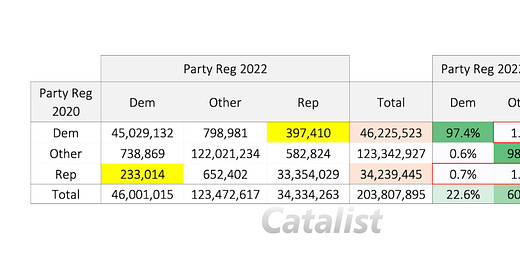

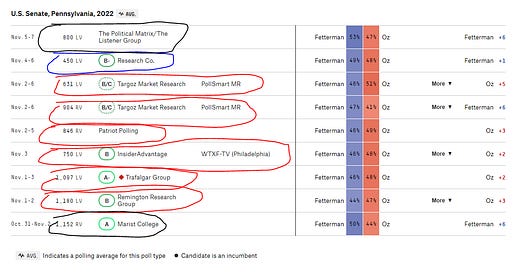

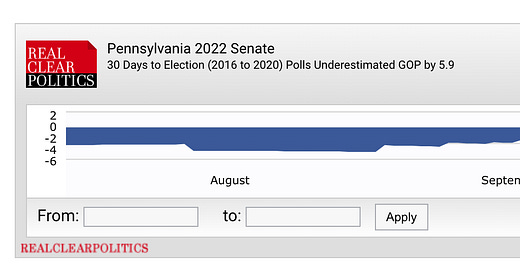

After the fact, this seems like quite the obvious oversight. Some of that assessment is surely hindsight bias, since the polls overestimated Joe Biden in pretty much every state. But we also could have known it was coming. The polls this year overestimated Biden in the same states, and by similar amounts, as they overestimated Clinton in 2016. The error in the polls has been correlated rather strongly across election cycles, which, while rare, is rather concerning. (Indeed, it is made all the more concerning because it is so rare.)
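One simple way to check whether polling errors line up across cycles is to correlate the state-level misses from one election with those from the next. The sketch below shows the calculation. The state labels and error values are placeholders invented for illustration, not actual 2016 or 2020 results.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Placeholder values: poll margin minus actual margin for the Democrat,
# in percentage points. These are NOT real election data.
errors_cycle_1 = {"State A": 1.0, "State B": 3.0, "State C": 5.0, "State D": 2.0}
errors_cycle_2 = {"State A": 2.0, "State B": 4.0, "State C": 6.0, "State D": 2.5}

states = sorted(errors_cycle_1)
r = pearson(
    [errors_cycle_1[s] for s in states],
    [errors_cycle_2[s] for s in states],
)
print(f"Cross-cycle correlation of state polling errors: {r:.2f}")
```

A value near 1 would mean the polls missed in the same places, by similar relative amounts, in both cycles. A value near 0 would suggest the misses were unrelated.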

Now, there are a few explanations for this error. One could be that the polls are missing people who do not trust institutions, their neighbors or even the pollsters, and that those people are disproportionately likely to vote for Republicans in ways that standard demographic weighting cannot fix. Another is simply that we’re inventing correlations where an intervening variable is to blame; maybe Trump beat the polls in 2016 because of the Comey letter and in 2020 because of a Republican turnout advantage that wasn’t factored into likely voter screens, and the systematic biases in the industry are not so serious.

But consider polarization, and party leadership, as another explanation. Because we are now so prone to bending our opinions to the will of our party leaders, there is a distinct chance for officials to bias polls in, or against, their favor, even if it’s unwitting. Maybe the reason that polls underestimated Trump in both 2016 and 2020 is that he has constantly told his supporters that pollsters are untrustworthy, that polls are tools for the mainstream media to bias political narratives against them or even that they are tools for voter suppression. Or maybe, less nefariously, his campaigning against elites simply spilled over to public polling too.

Whatever the explanation, it seems to me that polarization might also increase the chance for systematic biases in the polls. As a forecaster, that is something I will have to keep in mind going forward. Pollsters will also have to keep it in mind as they refine their methods to fix this year’s blow — the latest in a series of misfires that have tarnished the industry’s reputation, whether it deserves it or not.

…

Relatedly, the results of the election have spurred an abundance of think-pieces about how polls are “useless” or that election forecasters face an “existential threat” posed by polling inaccuracy. I think that most of these articles are hyperbolic, an overreaction to poorly calibrated expectations of how good polls can be. It’s also worth taking into account that many of the takes to this effect are penned by people who are critical of polls no matter how good they are.

Yes, it is concerning that errors this year are a bit larger than average, and almost uniformly in Trump’s direction. But a four-to-five percentage-point error is not the fatal misfire that some have made it out to be. For more, see the subscribers-only post linked below.

Posts for subscribers

November 15: Lower your expectations for the polls. They are subject to many sources of error and aren’t as precise as you think

What I'm Reading and Working On

I’m busy writing my book on polls for the next few months so won’t be doing much reading. However, I did pick up a copy of Robert Putnam’s new book, The Upswing: How America Came Together a Century Ago and How We Can Do It Again, and am looking forward to reading it once I get the chance. I am a big fan of his sweeping sociological analyses of our politics and society.

Thanks for reading!

Thanks for reading. I’ll be back in your inbox next Sunday. In the meantime, follow me online or reach out via email if you’d like to engage. I’d love to hear from you. If you want more content, I publish subscriber-only posts on Substack 1-3 times each week.

Photo contest

Wendy sends in this photo of Paco & Lucía, “siblings who are named for the Spanish guitarist Paco de Lucía.” I had never heard of him before but this is a beautiful song.

For next week’s contest, send me a photo of your pet(s) to elliott[AT]gelliottmorris[DOT]com!

A few years ago I worked on an ML-powered application that distributed TV ad money across networks and programs. TV ads don't have the benefit of clickthrough data. Instead, we used correlations like: people who watch Friends buy Nissan SUVs and shop at natural markets, they tend to go on road trips instead of taking the plane, and the like. I'd try to correlate voter data with lifestyle preferences and from there figure out which slices are missing from poll samples.

Have folks tried to do backwards looking polls to try and quantify the selection bias? One could imagine a very simple experiment where you take a poll of known voters during '16 (so that you don't have to deal with flawed turnout estimates) and try and recover the precinct level or county level vote shares. From there you should be able to get a nonresponse estimate, assuming you mitigate partisan social desirability bias (using randomization or some other technique).